Introduction

This article provides an overview of SASL authentication with Kafka. An accompanying Spring Boot application demonstrates the configuration required to enable a producer and consumer to connect to Kafka with this authentication mechanism. The flow is also tested with Testcontainers and the Component Test Framework using SASL authentication.

The source code for the Spring Boot application is here: https://github.com/lydtechconsulting/kafka-sasl-plain/tree/v1.0.0

SASL

The Simple Authentication and Security Layer (SASL) is one of the technologies (along with TLS, also referred to as SSL) that underpin the security protocols supported by Kafka. The SASL framework can be used by a number of different mechanisms to authenticate the client (that is, a consumer or a producer). SASL can be combined with a PLAINTEXT transport, where requests are not encrypted (the SASL_PLAINTEXT security protocol), or with TLS/SSL, where encryption is enabled (SASL_SSL).

Kafka supports a number of SASL mechanisms, including PLAIN, GSSAPI and SCRAM-SHA-512. SASL can be applied to client connections, inter-broker connections, and broker to Zookeeper calls. The selected mechanism in each case determines the sequence and format of the server challenges and client responses exchanged during the authentication flow. The server must support the SASL mechanism selected by the client, otherwise the authentication attempt is rejected.

The client sends a username and password to the server, which verifies them against its password store over a series of challenges and responses. Once there are no further challenges, the authenticated connection is established.

By default, the password store is the Kafka JAAS configuration. As long as the username/password pair exists in the store, the client is authenticated successfully.
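To make the exchange concrete, here is a minimal sketch of the SASL PLAIN message format defined in RFC 4616. With PLAIN, the challenge/response sequence reduces to a single client message of the form [authzid] NUL username NUL password, which the server checks against its password store. The class and method names are hypothetical, and the in-memory map stands in for the JAAS-backed store; this is not Kafka's own implementation.

```java
import java.nio.charset.StandardCharsets;
import java.security.MessageDigest;
import java.util.Map;

/**
 * Hypothetical sketch of the SASL PLAIN exchange (RFC 4616).
 * Client: sends one message, [authzid] NUL authcid NUL passwd.
 * Server: splits the message and checks it against a password store.
 */
class SaslPlainSketch {

    private static final String NUL = "\0";

    // Client side: encode the initial response with an empty authorization id.
    static byte[] encode(String username, String password) {
        return (NUL + username + NUL + password).getBytes(StandardCharsets.UTF_8);
    }

    // Server side: decode the message and verify the credentials.
    static boolean authenticate(byte[] response, Map<String, String> passwordStore) {
        // Limit -1 keeps the empty leading authzid field.
        String[] parts = new String(response, StandardCharsets.UTF_8).split(NUL, -1);
        if (parts.length != 3) {
            return false; // malformed message
        }
        String expected = passwordStore.get(parts[1]);
        // Constant-time comparison so timing does not reveal partial matches.
        return expected != null && MessageDigest.isEqual(
                expected.getBytes(StandardCharsets.UTF_8),
                parts[2].getBytes(StandardCharsets.UTF_8));
    }

    public static void main(String[] args) {
        // Store mirroring a user_demo="demo-password" JAAS entry.
        Map<String, String> store = Map.of("demo", "demo-password");
        System.out.println(authenticate(encode("demo", "demo-password"), store)); // true
        System.out.println(authenticate(encode("demo", "wrong"), store));         // false
    }
}
```

Note that nothing in this message is encrypted: the password travels as-is, which is why the transport protocol (SASL_PLAINTEXT versus SASL_SSL) matters.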

In this demonstration passwords are stored and transmitted on the network in plain text. These must be encrypted in a Production setting. Likewise, in Production, the password store is likely to be a secured third party password server.

Spring Boot Demo Application

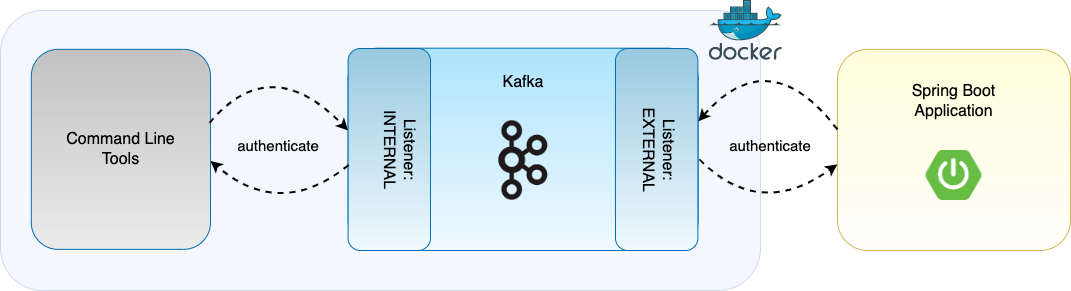

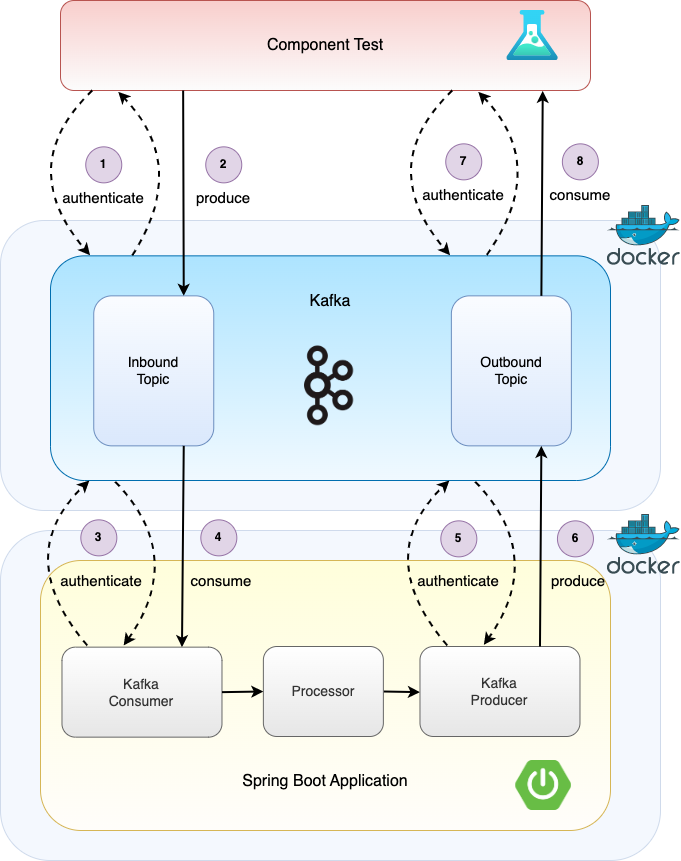

The accompanying Spring Boot application has a consumer that consumes events from an inbound topic and a producer that emits a corresponding event for each to an outbound topic. Both the consumer and the producer must authenticate with the broker in order to connect. The application demonstrates the configuration and usage of the SASL PLAIN authentication mechanism. To trigger the flow, the Kafka command line tools are used to first produce an event to the inbound topic (which the application consumes from), and then consume the resulting outbound event that the application has emitted. The console producer and consumer must also authenticate with the broker using the SASL PLAIN authentication mechanism.

Server Configuration

The server configuration refers to the configuration of the broker. In order to enable SASL PLAIN authentication, the Kafka broker must be configured appropriately. For the Spring Boot demonstration, the application connects to a dockerised instance of Kafka, so Kafka is configured in the docker-compose.yml file, which is used to bring up the Docker containers. In this demonstration, the environment variables concerned with SASL are the following:

KAFKA_LISTENER_SECURITY_PROTOCOL_MAP: INTERNAL:SASL_PLAINTEXT,EXTERNAL:SASL_PLAINTEXT
KAFKA_ADVERTISED_LISTENERS: INTERNAL://kafka:29092,EXTERNAL://localhost:9092
KAFKA_LISTENER_NAME_INTERNAL_SASL_ENABLED_MECHANISMS: PLAIN
KAFKA_LISTENER_NAME_EXTERNAL_SASL_ENABLED_MECHANISMS: PLAIN
KAFKA_SASL_MECHANISM_INTER_BROKER_PROTOCOL: PLAIN
KAFKA_LISTENER_NAME_INTERNAL_PLAIN_SASL_JAAS_CONFIG: |
  org.apache.kafka.common.security.plain.PlainLoginModule required \
  username="demo" \
  password="demo-password" \
  user_demo="demo-password";
KAFKA_LISTENER_NAME_EXTERNAL_PLAIN_SASL_JAAS_CONFIG: |
  org.apache.kafka.common.security.plain.PlainLoginModule required \
  username="demo" \
  password="demo-password" \
  user_demo="demo-password";
KAFKA_INTER_BROKER_LISTENER_NAME: INTERNAL

Any number of listeners can be configured. Here there are listeners named INTERNAL and EXTERNAL. These names reflect clients connecting from within the Docker network (internal clients) and from outside the network (external clients), but any names could be used.

KAFKA_ADVERTISED_LISTENERS: INTERNAL://kafka:29092,EXTERNAL://localhost:9092

In the case of the Spring Boot application, if it is run from the command line as a standalone application (i.e. not from within a Docker network), it connects to Kafka via the external listener; if it is run from within a Docker container on the same Docker network, it connects via the internal listener. In the demo the application is not running within a Docker container, so it connects via the EXTERNAL listener. The Kafka command line tools run from within the Kafka Docker container, so they connect via the INTERNAL listener.

The security protocol map then defines the security protocol to use for each of the defined listeners. In this example SASL authentication is configured for both internal and external connections, and both are demonstrated here:

KAFKA_LISTENER_SECURITY_PROTOCOL_MAP: INTERNAL:SASL_PLAINTEXT,EXTERNAL:SASL_PLAINTEXT
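The advertised listeners and the protocol map can be read together: the first gives each listener's address, the second its security protocol. The following sketch parses both strings exactly as they appear in docker-compose.yml and reports what a client on a given listener gets. The class, its methods and the insideDockerNetwork flag are hypothetical; Kafka performs this parsing internally.

```java
import java.util.LinkedHashMap;
import java.util.Map;

/** Hypothetical helper for reading the two listener settings side by side. */
class ListenerSketch {

    // "INTERNAL://kafka:29092,EXTERNAL://localhost:9092" -> {INTERNAL=kafka:29092, EXTERNAL=localhost:9092}
    static Map<String, String> parseAdvertised(String advertised) {
        Map<String, String> result = new LinkedHashMap<>();
        for (String entry : advertised.split(",")) {
            String[] parts = entry.trim().split("://", 2);
            result.put(parts[0], parts[1]);
        }
        return result;
    }

    // "INTERNAL:SASL_PLAINTEXT,EXTERNAL:SASL_PLAINTEXT" -> {INTERNAL=SASL_PLAINTEXT, EXTERNAL=SASL_PLAINTEXT}
    static Map<String, String> parseProtocolMap(String protocolMap) {
        Map<String, String> result = new LinkedHashMap<>();
        for (String entry : protocolMap.split(",")) {
            String[] parts = entry.trim().split(":", 2);
            result.put(parts[0], parts[1]);
        }
        return result;
    }

    // SASL_PLAINTEXT and SASL_SSL authenticate the client via SASL.
    static boolean usesSasl(String protocol) {
        return protocol.startsWith("SASL_");
    }

    // SSL and SASL_SSL encrypt traffic; PLAINTEXT and SASL_PLAINTEXT do not.
    static boolean isEncrypted(String protocol) {
        return protocol.endsWith("SSL");
    }

    public static void main(String[] args) {
        Map<String, String> addresses = parseAdvertised("INTERNAL://kafka:29092,EXTERNAL://localhost:9092");
        Map<String, String> protocols = parseProtocolMap("INTERNAL:SASL_PLAINTEXT,EXTERNAL:SASL_PLAINTEXT");

        // Assumption: clients inside the Docker network use INTERNAL, all others EXTERNAL.
        boolean insideDockerNetwork = false;
        String listener = insideDockerNetwork ? "INTERNAL" : "EXTERNAL";
        String protocol = protocols.get(listener);
        System.out.printf("%s -> %s (%s, sasl=%b, encrypted=%b)%n",
                listener, addresses.get(listener), protocol, usesSasl(protocol), isEncrypted(protocol));
        // EXTERNAL -> localhost:9092 (SASL_PLAINTEXT, sasl=true, encrypted=false)
    }
}
```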

Clients choose the SASL mechanism to use for their connection (PLAIN or GSSAPI, for example), but are restricted to the mechanisms enabled for the listener. In this case only PLAIN is enabled for both:

KAFKA_LISTENER_NAME_INTERNAL_SASL_ENABLED_MECHANISMS: PLAIN
KAFKA_LISTENER_NAME_EXTERNAL_SASL_ENABLED_MECHANISMS: PLAIN
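The rule that a client may only use a mechanism enabled on its listener, and is otherwise rejected, amounts to a per-listener lookup. The map below mirrors the two SASL_ENABLED_MECHANISMS settings; the class is hypothetical, and in reality the broker enforces this during the SASL handshake.

```java
import java.util.List;
import java.util.Map;
import java.util.Set;

/** Hypothetical sketch of the per-listener enabled-mechanism check. */
class MechanismCheckSketch {

    // Mirrors KAFKA_LISTENER_NAME_{INTERNAL,EXTERNAL}_SASL_ENABLED_MECHANISMS: PLAIN
    static final Map<String, Set<String>> ENABLED_MECHANISMS = Map.of(
            "INTERNAL", Set.of("PLAIN"),
            "EXTERNAL", Set.of("PLAIN"));

    // Accept the client's mechanism only if its listener has it enabled.
    static boolean isAccepted(String listener, String clientMechanism) {
        return ENABLED_MECHANISMS.getOrDefault(listener, Set.of()).contains(clientMechanism);
    }

    public static void main(String[] args) {
        for (String mechanism : List.of("PLAIN", "GSSAPI", "SCRAM-SHA-512")) {
            System.out.println(mechanism + " on EXTERNAL accepted: " + isAccepted("EXTERNAL", mechanism));
        }
    }
}
```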

Note that each environment variable contains the name of the listener, so if the listener were instead named SECURED, the variable would be named KAFKA_LISTENER_NAME_SECURED_SASL_ENABLED_MECHANISMS.
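The naming pattern can be made explicit with a small conversion. This sketch assumes the demo uses the Confluent cp-kafka Docker image, which maps environment variables to broker properties by dropping the KAFKA_ prefix, lowercasing, and replacing underscores with dots. It deliberately ignores that image's escape sequences for property names containing literal underscores or dashes, which none of the variables above need. The class name is hypothetical.

```java
import java.util.Locale;

/** Hypothetical sketch of the env-var-to-broker-property naming convention (assumed cp-kafka image). */
class EnvToPropertySketch {

    static String toBrokerProperty(String envVar) {
        if (!envVar.startsWith("KAFKA_")) {
            throw new IllegalArgumentException("Not a Kafka setting: " + envVar);
        }
        return envVar.substring("KAFKA_".length())
                .toLowerCase(Locale.ROOT)
                .replace('_', '.'); // single underscores only; escapes not handled
    }

    public static void main(String[] args) {
        System.out.println(toBrokerProperty("KAFKA_LISTENER_NAME_SECURED_SASL_ENABLED_MECHANISMS"));
        // listener.name.secured.sasl.enabled.mechanisms
    }
}
```

The result follows Kafka's listener.name.{listener name}.{property} prefix, which is how a single broker property can be set differently per listener.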

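The JAAS configuration for the two listeners is also defined here, although this would typically be held in a secure password store. Each user_<name> entry defines a user that may connect, so in each case the user demo can connect with the password demo-password. The username and password options are the credentials the broker itself uses when connecting to other brokers over that listener.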
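Because the broker-side JAAS entry doubles as the default password store, the users it defines can be read straight off its user_<name> options. The regex-based parser below is a hypothetical illustration only, not how Kafka parses JAAS configuration.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

/** Hypothetical sketch: extract the user_<name>="<password>" options from a PlainLoginModule entry. */
class JaasUserStoreSketch {

    // Matches user_demo="demo-password" but not username="demo".
    private static final Pattern USER_OPTION = Pattern.compile("\\buser_(\\w+)=\"([^\"]*)\"");

    static Map<String, String> users(String jaasConfig) {
        Map<String, String> store = new LinkedHashMap<>();
        Matcher matcher = USER_OPTION.matcher(jaasConfig);
        while (matcher.find()) {
            store.put(matcher.group(1), matcher.group(2)); // user name -> password
        }
        return store;
    }

    public static void main(String[] args) {
        String jaas = "org.apache.kafka.common.security.plain.PlainLoginModule required "
                + "username=\"demo\" password=\"demo-password\" user_demo=\"demo-password\";";
        System.out.println(users(jaas)); // {demo=demo-password}
    }
}
```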

The demonstration uses the Kafka command line tools, which are part of the Kafka installation and so are available in the Kafka Docker container, to produce and consume events. These tools connect to Kafka via the internal listener and must be configured to authenticate via SASL PLAIN. The properties they use are defined in the client.properties file in the kafka-client-config directory, which is bind-mounted into the Kafka container in the docker-compose.yml file so that it is available to the CLI tools:

volumes:
  - type: bind
    source: "./kafka-client-config"
    target: /kafka-client-config
    read_only: true

Client Configuration

Spring Boot Application Client

The Spring Boot Kafka components, the consumer and producer, are configured to connect to the broker using SASL PLAIN in the Spring Configuration class, KafkaDemoConfiguation. This class contains the Spring bean definitions that are loaded into the Spring application context when the application is started.

In this demo the SASL configuration for the producer and the consumer is the same, so the ProducerFactory and ConsumerFactory beans both have the following properties:

config.put(CommonClientConfigs.SECURITY_PROTOCOL_CONFIG, "SASL_PLAINTEXT");
config.put(SaslConfigs.SASL_MECHANISM, "PLAIN");
config.put(SaslConfigs.SASL_JAAS_CONFIG, String.format(
    "%s required username=\"%s\" password=\"%s\";", PlainLoginModule.class.getName(), username, password
));

As previously mentioned, the security protocol is configured as SASL_PLAINTEXT, so the transport layer is PLAINTEXT and data passed over the network is not encrypted. Changing the protocol to SASL_SSL adds TLS encryption (the broker listener must then also be configured for SASL_SSL).

The SASL mechanism is configured as PLAIN, so the application's Kafka producer and consumer attempt to authenticate with the Kafka broker using SASL PLAIN with the provided JAAS configuration. The username and password in this configuration are populated from the application.yml file:

kafka:
  sasl:
    enabled: true
    username: demo
    password: demo-password

The kafka.sasl.enabled boolean property toggles whether the SASL configuration is applied. Setting it to false skips the SASL configuration, which allows the application to be integration tested against the Spring Kafka embedded broker (covered below).
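Putting the client properties and the toggle together, the following sketch builds the producer/consumer configuration map. It uses the literal property names (bootstrap.servers, security.protocol, sasl.mechanism, sasl.jaas.config), which are the values of the CommonClientConfigs and SaslConfigs constants used above, so that it compiles without kafka-clients on the classpath. The clientConfig method is hypothetical and is not the code in KafkaDemoConfiguation.

```java
import java.util.HashMap;
import java.util.Map;

/** Hypothetical sketch of applying the kafka.sasl.enabled toggle to client configuration. */
class SaslClientConfigSketch {

    static final String PLAIN_LOGIN_MODULE = "org.apache.kafka.common.security.plain.PlainLoginModule";

    static Map<String, Object> clientConfig(String bootstrapServers, boolean saslEnabled,
                                            String username, String password) {
        Map<String, Object> config = new HashMap<>();
        config.put("bootstrap.servers", bootstrapServers);
        if (saslEnabled) {
            // Same three settings as the ProducerFactory/ConsumerFactory snippet, as string keys.
            config.put("security.protocol", "SASL_PLAINTEXT");
            config.put("sasl.mechanism", "PLAIN");
            config.put("sasl.jaas.config", String.format(
                    "%s required username=\"%s\" password=\"%s\";", PLAIN_LOGIN_MODULE, username, password));
        }
        return config;
    }

    public static void main(String[] args) {
        // Values from application.yml.
        System.out.println(clientConfig("localhost:9092", true, "demo", "demo-password").get("sasl.jaas.config"));
        // org.apache.kafka.common.security.plain.PlainLoginModule required username="demo" password="demo-password";
        System.out.println(clientConfig("localhost:9092", false, "demo", "demo-password").containsKey("sasl.mechanism"));
        // false
    }
}
```

With the toggle set to false, only bootstrap.servers is set, so the clients connect without attempting SASL authentication.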

Command Line Tools Client

The kafka-console-producer and kafka-console-consumer command line tools also authenticate with the broker using SASL PLAIN. Their configuration mirrors the Spring Boot application's client configuration. It is supplied in the client.properties configuration file, which is bind-mounted into the Kafka Docker container so that the CLI tools can be executed with this configuration:

security.protocol=SASL_PLAINTEXT
sasl.mechanism=PLAIN
sasl.jaas.config=org.apache.kafka.common.security.plain.PlainLoginModule required username="demo" password="demo-password";

Running The Demo

To run the demo, the Docker containers for Kafka and Zookeeper are first started, then the Spring Boot application is built and run:

docker-compose up -d
mvn clean install
java -jar target/kafka-sasl-plain-1.0.0.jar

An event is then written to the application's inbound topic (demo-inbound-topic) using the kafka-console-producer command line tool, which executes from within the Kafka Docker container. The command passes the SASL configuration contained in the client.properties file:

docker exec -it kafka kafka-console-producer \
  --topic demo-inbound-topic \
  --bootstrap-server kafka:29092 \
  --producer.config /kafka-client-config/client.properties

The inbound event is a JSON event containing just a sequenceNumber, so the following is entered at the command prompt:

{"sequenceNumber": "1"}

The application consumes this event and emits a corresponding event with the same payload to the outbound topic (demo-outbound-topic). This is then consumed using the kafka-console-consumer command as follows:

docker exec -it kafka kafka-console-consumer \
  --topic demo-outbound-topic \
  --bootstrap-server kafka:29092 \
  --consumer.config /kafka-client-config/client.properties \
  --from-beginning

This outputs the consumed event, completing the round trip. The Spring Boot Kafka components (consumer and producer), and the Kafka console consumer and producer have all successfully authenticated with the broker using SASL PLAIN.

Integration Testing

Spring Kafka provides an embedded, in-memory Kafka broker (EmbeddedKafkaBroker, typically enabled with the @EmbeddedKafka annotation) that can be used with Spring Boot tests to verify that the application can successfully read messages from and write messages to Kafka. However, this in-memory broker does not support authenticating clients, so SASL has to be disabled in order to test the flow. This is done by overriding the kafka.sasl.enabled property in the application-test.yml property file:

kafka:
  sasl:
    enabled: false

Based on this toggle, the Spring configuration class KafkaDemoConfiguation does not apply the SASL configuration when the tests run.

While this means the rest of the application's integration with Kafka can be tested (an example integration test is provided in EndToEndIntegrationTest), component tests and/or system integration tests against a real Kafka instance are required to test the authentication flow.

Component Testing

The Testcontainers library enables resources that the application integrates with, such as Kafka, to be brought up in Docker containers for testing. This can be orchestrated via Lydtech's open source Component Test Framework. By annotating a JUnit test with the ComponentTestExtension extension and defining the required configuration in the Maven pom.xml or gradle.properties, the test first brings up Kafka, Zookeeper and the Spring Boot application in their own Docker containers before interacting with the system, treating it as a black box.

The demo contains an example component test: EndToEndCT.

SASL PLAIN authentication is enabled, and the username and password to use are configured, in the maven-surefire-plugin declared in the component profile of the pom.xml:

<kafka.sasl.plain.enabled>true</kafka.sasl.plain.enabled>
<kafka.sasl.plain.username>demo</kafka.sasl.plain.username>
<kafka.sasl.plain.password>demo-password</kafka.sasl.plain.password>

The test is run from the command line. First the application is built and its Docker image is created, then the Maven test phase is run with the component profile:

mvn clean install
docker build -t ct/kafka-sasl-plain:latest .
mvn test -Pcomponent

The flow is similar to the demo walkthrough described above, except that here the test, rather than the command line tools, sends an event to the application's inbound topic and then consumes and asserts on the outbound event emitted by the application. As well as the Spring Boot application's Kafka clients authenticating with the Kafka server, the Component Test Framework's test consumer and producer also authenticate using SASL PLAIN. Component testing therefore provides a repeatable, automatable test that exercises the authentication aspect of the end to end flow.

Summary

Kafka listeners, which are responsible for accepting client connections, can be configured to require the client to first authenticate, using a number of different security protocols. The selected protocol determines whether connections are authenticated, encrypted, or both. This article provided an overview of SASL PLAIN and stepped through securing a Spring Boot application with this authentication mechanism. A full suite of tests for an application must include testing this authentication aspect, as demonstrated here using Testcontainers with the Component Test Framework.

Source Code

The source code for the accompanying Spring Boot demo application is available here:

https://github.com/lydtechconsulting/kafka-sasl-plain/tree/v1.0.0

More On Authentication

Securing Kafka with Mutual TLS and ACLs: Securing a Kafka installation using a combination of mutual TLS and ACLs.

Authentication and Authorisation using OIDC and OAuth 2: An introduction into the world of Authentication and Authorisation.
