Rob Golder - October 2021

Introduction

The first part of this article describes the different deduplication patterns and calls out the various duplicates that can occur. This part draws out each of the failure scenarios in detail to show how and where those duplicates occur.

Diagramming The Failures

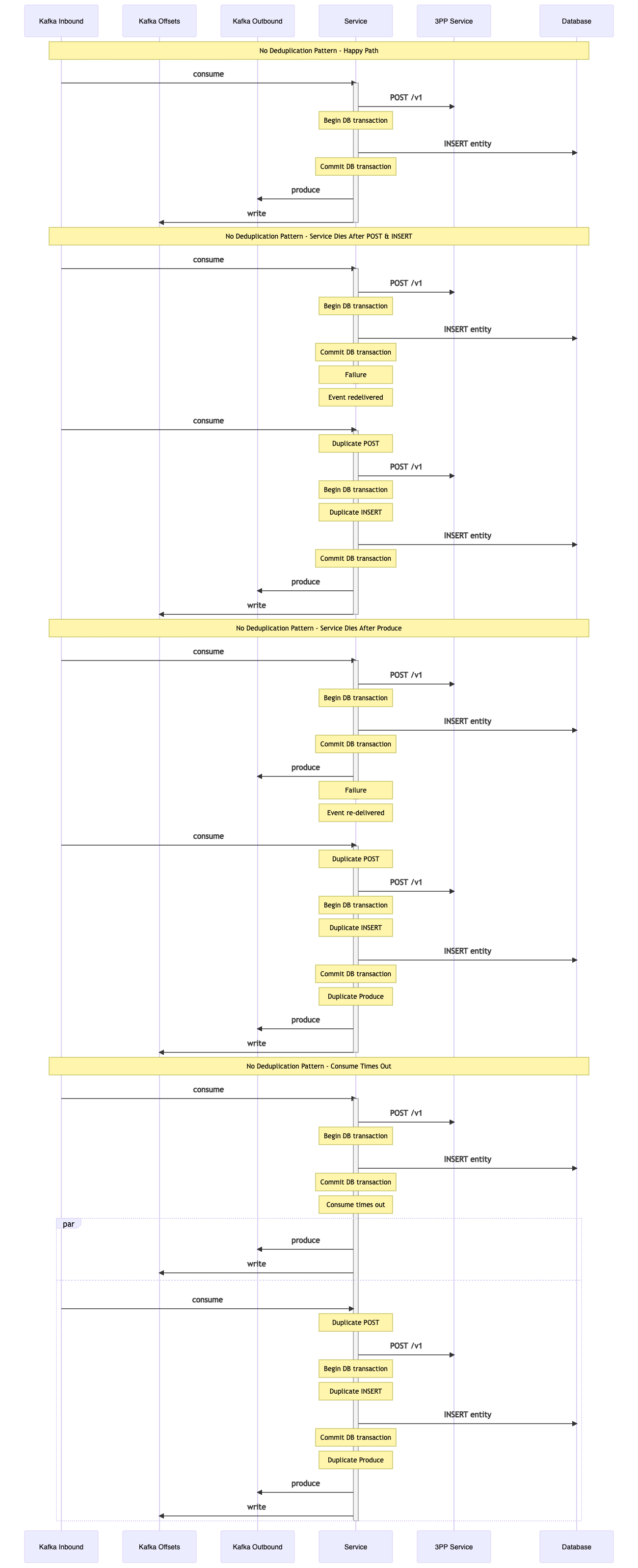

No Deduplication Pattern

This is the basic flow with no deduplication patterns in place: the service performs a REST POST request, inserts an entity in the database, and produces a resulting event.

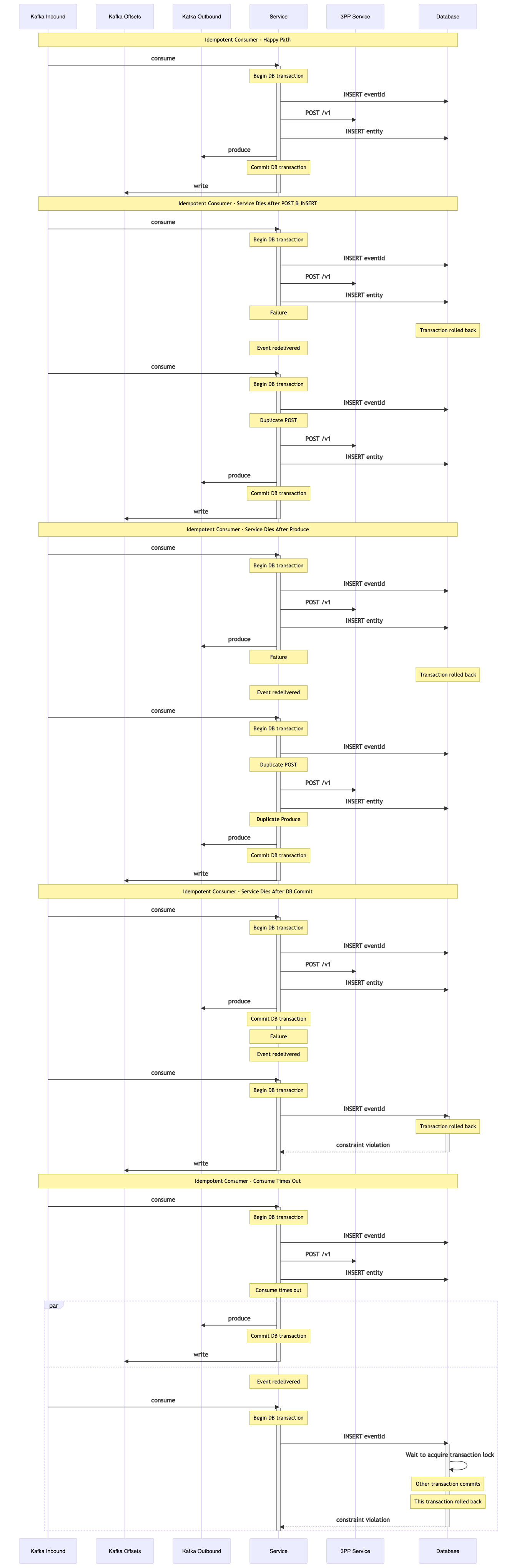

Idempotent Consumer

The DB transaction must wrap the bulk of the processing, starting with inserting the eventId, and ending after the outbound event is produced. The consumer offsets update must be the last action so that any failure results in a redelivery.
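The ordering described above can be sketched with an in-memory SQLite database standing in for the service's database. The table names, `handle_event`, and the `produce` and `commit_offset` callbacks are hypothetical stand-ins rather than a real Kafka client API, so treat this as a minimal illustration of the ordering and not a production implementation.

```python
import sqlite3


def handle_event(conn, event_id, payload, produce, commit_offset):
    """Process a consumed event, skipping it if event_id was already processed."""
    try:
        with conn:  # one DB transaction: commits on success, rolls back on error
            # The primary key on event_id rejects a redelivered event.
            conn.execute(
                "INSERT INTO processed_events (event_id) VALUES (?)", (event_id,)
            )
            conn.execute("INSERT INTO entities (payload) VALUES (?)", (payload,))
            # Produce before the commit so a failed send rolls back the DB writes.
            produce({"sourceEventId": event_id, "payload": payload})
    except sqlite3.IntegrityError:
        pass  # duplicate event: the earlier processing already did the work
    # Offsets are updated last, so any failure above results in a redelivery.
    commit_offset(event_id)


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE processed_events (event_id TEXT PRIMARY KEY)")
conn.execute("CREATE TABLE entities (id INTEGER PRIMARY KEY, payload TEXT)")

produced, offsets = [], []
handle_event(conn, "evt-1", "hello", produced.append, offsets.append)
handle_event(conn, "evt-1", "hello", produced.append, offsets.append)  # redelivery
```

In this sketch the redelivered `evt-1` hits the primary key constraint, so no second entity is written and no second outbound event is produced, while the offset is still acknowledged.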

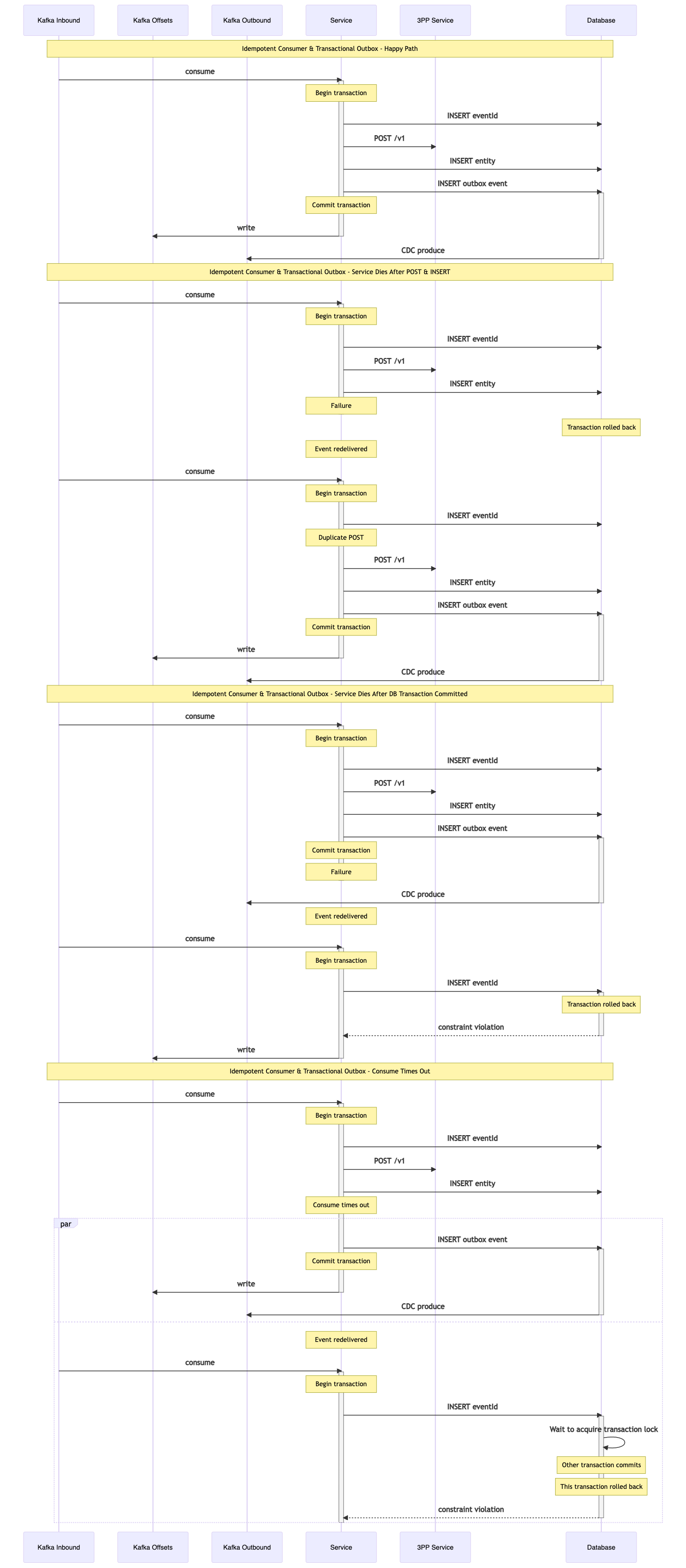

Transactional Outbox

The DB transaction wraps any entity writes and the insert of the outbox event. CDC results in the event being produced to the outbound topic.
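As a rough illustration of this pattern, the sketch below writes an entity and its outbox row in a single SQLite transaction. The schema, topic name, and function names are assumptions made for the example, and `relay_outbox` is only a polling stand-in for CDC, which in practice typically reads the database's transaction log instead.

```python
import json
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE entities (id INTEGER PRIMARY KEY, name TEXT)")
conn.execute("CREATE TABLE outbox (id INTEGER PRIMARY KEY, topic TEXT, payload TEXT)")


def create_entity(conn, name):
    """Write the entity and its outbox event atomically."""
    with conn:  # both inserts commit together or roll back together
        cur = conn.execute("INSERT INTO entities (name) VALUES (?)", (name,))
        event = json.dumps({"entityId": cur.lastrowid, "name": name})
        conn.execute(
            "INSERT INTO outbox (topic, payload) VALUES (?, ?)",
            ("entity-created", event),
        )


def relay_outbox(conn, produce):
    """Hypothetical stand-in for CDC: publish each outbox row, then delete it."""
    rows = conn.execute("SELECT id, topic, payload FROM outbox ORDER BY id").fetchall()
    for row_id, topic, payload in rows:
        produce(topic, payload)
        with conn:
            conn.execute("DELETE FROM outbox WHERE id = ?", (row_id,))


sent = []
create_entity(conn, "widget")
relay_outbox(conn, lambda topic, payload: sent.append((topic, payload)))
```

Note that in this sketch a crash between `produce` and the delete would cause the row to be published again on the next relay run, so the relay itself delivers at least once.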

Idempotent Consumer And Transactional Outbox

This flow combines the Idempotent Consumer and Transactional Outbox patterns. The event deduplication, any entity writes, and the outbox event write (which results in the event being produced to the outbound topic) are atomic.
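The atomicity of the three writes can be shown in a few lines, again assuming an in-memory SQLite database and hypothetical table, topic, and function names. Nothing is produced directly here: the outbox row is the only record of the outbound event, and publishing it is left to CDC.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE processed_events (event_id TEXT PRIMARY KEY);
    CREATE TABLE entities (id INTEGER PRIMARY KEY, payload TEXT);
    CREATE TABLE outbox (id INTEGER PRIMARY KEY, topic TEXT, payload TEXT);
    """
)


def handle_event(conn, event_id, payload):
    """Return True if the event was processed, False if it was a duplicate."""
    try:
        with conn:  # dedup insert, entity write and outbox write share one transaction
            conn.execute(
                "INSERT INTO processed_events (event_id) VALUES (?)", (event_id,)
            )
            conn.execute("INSERT INTO entities (payload) VALUES (?)", (payload,))
            conn.execute(
                "INSERT INTO outbox (topic, payload) VALUES (?, ?)",
                ("outbound-topic", payload),
            )
        return True
    except sqlite3.IntegrityError:
        # The event_id already exists, so the whole transaction is rolled back.
        return False


first = handle_event(conn, "evt-1", "hello")
second = handle_event(conn, "evt-1", "hello")  # redelivered duplicate
```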

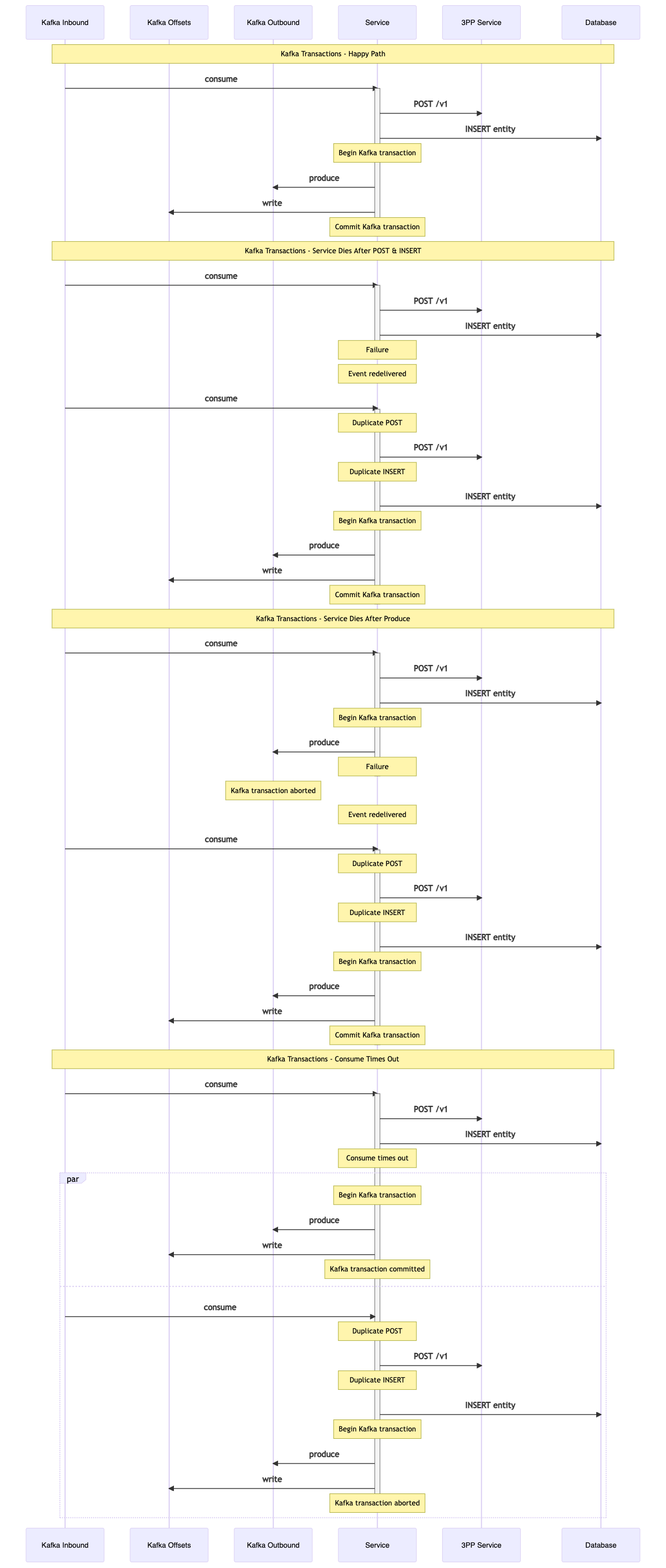

Kafka Transactions

With Kafka Transactions the publish of the outbound event and the consumer offsets update are atomic.

In the failure scenario where the event is produced to the topic but the transaction is then aborted, the write to the topic succeeds, but the event is not marked as committed in the transaction log. Consumers of this topic must be configured with the READ_COMMITTED isolation level so that they do not consume events that have not been successfully marked as committed.
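The difference between the two isolation levels can be modelled with a toy in-memory log. This is a simplified model and not the Kafka client: `Record`, `visible_records`, and the `txn_outcomes` map are invented for the illustration. On a real Kafka consumer the equivalent setting is the `isolation.level` configuration property, with the value `read_committed`.

```python
from dataclasses import dataclass


@dataclass
class Record:
    offset: int
    txn_id: str
    value: str


def visible_records(log, txn_outcomes, isolation_level):
    """Return the values a consumer would see under the given isolation level.

    txn_outcomes maps txn_id to "committed" or "aborted"; a missing entry
    means the transaction is still open.
    """
    if isolation_level == "read_uncommitted":
        return [record.value for record in log]
    visible = []
    for record in log:
        outcome = txn_outcomes.get(record.txn_id)
        if outcome is None:
            break  # do not read past the first record of an open transaction
        if outcome == "committed":
            visible.append(record.value)  # records of aborted transactions are skipped
    return visible


log = [Record(0, "t1", "a"), Record(1, "t2", "b"), Record(2, "t3", "c")]
outcomes = {"t1": "committed", "t2": "aborted"}  # t3 is still open

uncommitted_view = visible_records(log, outcomes, "read_uncommitted")
committed_view = visible_records(log, outcomes, "read_committed")
```

Here the record written by the aborted transaction `t2` is physically present in the log and appears in `uncommitted_view`, but it is filtered out of `committed_view`.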

Appendix: Avoiding Data Loss

Not all patterns can be combined, and the patterns that are applied constrain the order in which the actions must happen to ensure that no data (an event or a database write) is lost. This section illustrates a number of flows showing how data loss could occur in these situations.

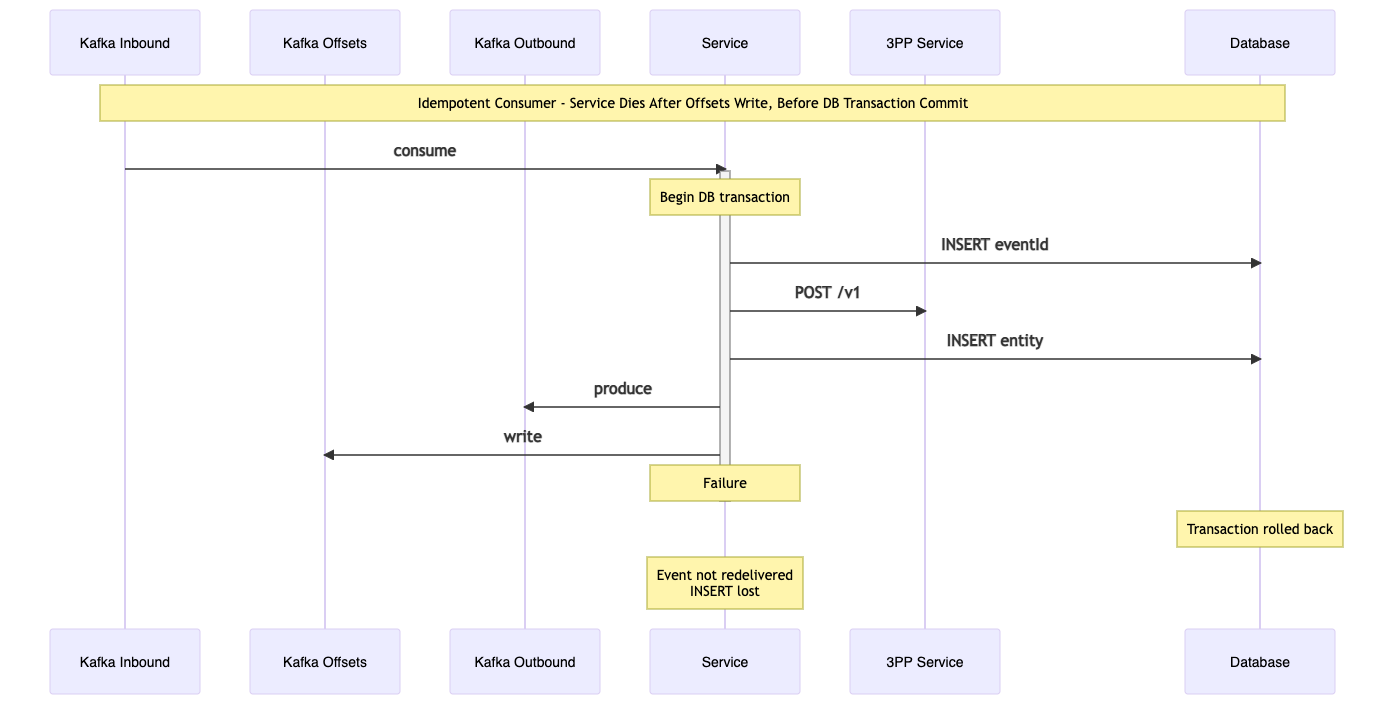

Idempotent Consumer

With the Idempotent Consumer pattern the database transaction must follow the event publish. If it precedes it, and the service fails before the database transaction is committed, then the database writes will be lost as the event has been marked as consumed and will not be redelivered.

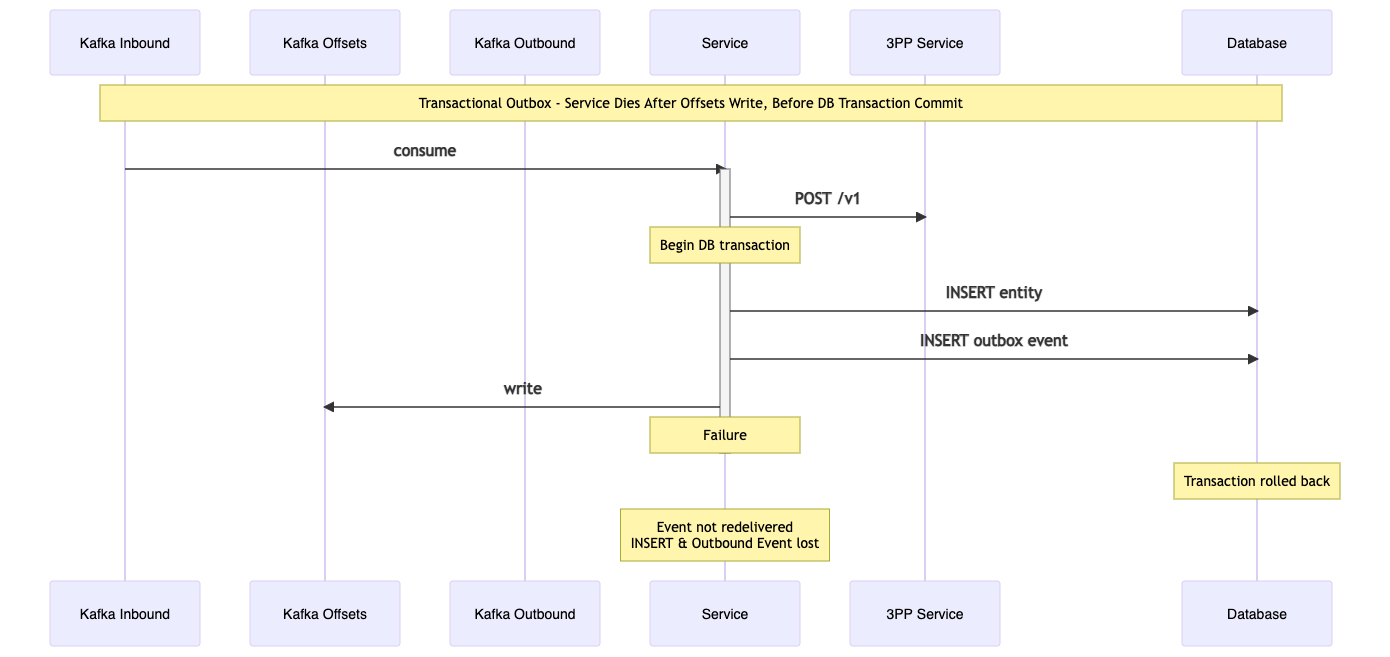

Transactional Outbox

With the Transactional Outbox pattern the database transaction must precede the consumer offsets write. If the consumer offsets are updated, marking the event as consumed, and the service dies before the database transaction is committed, then the event will not be redelivered and the database writes and outbound event publish will be lost.

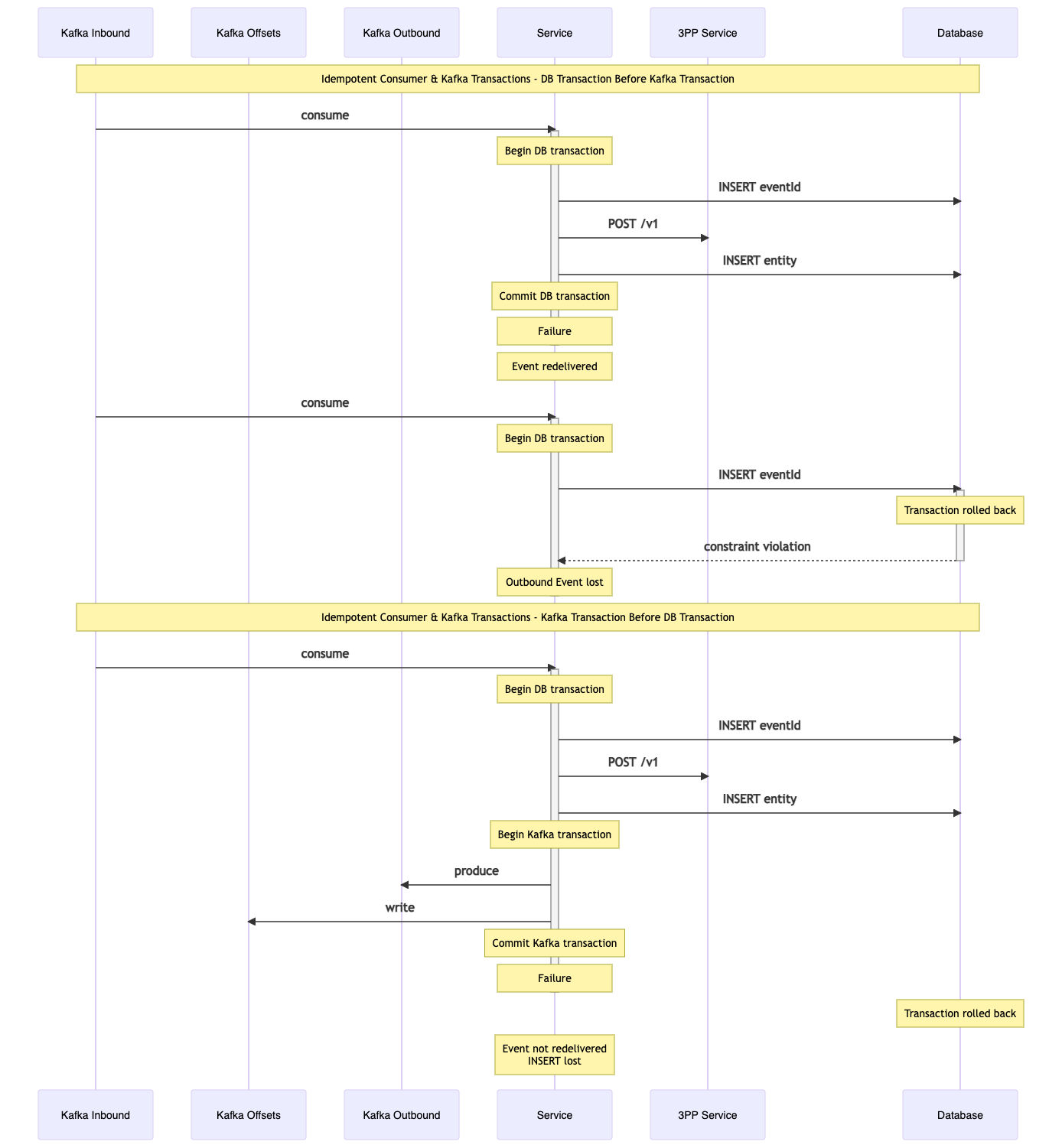

Idempotent Consumer And Kafka Transactions

It is not possible to combine the Idempotent Consumer and Kafka Transactions patterns without risking data loss in certain failure scenarios, as the following diagram illustrates.

If the database transaction commit precedes the Kafka transaction commit, and the service fails before the Kafka transaction is committed, then when the event is redelivered it will be deduplicated by the Idempotent Consumer. This means the resulting outbound event will never be published.

If the Kafka transaction commit precedes the database transaction commit, and the service fails before the database transaction is committed, then the event will not be redelivered as the Kafka transaction has updated the consumer offsets. This means any database writes will be lost.
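Both orderings can be simulated in a few lines. The model below is deliberately simplified and every name in it is hypothetical: `commit_db` stands for the database transaction commit (including the Idempotent Consumer's record of the event ID), `commit_kafka` stands for the Kafka transaction commit (outbound event plus consumer offsets), and a crash is injected between the two.

```python
class ServiceCrash(Exception):
    """Simulated service failure between the two commits."""


class State:
    def __init__(self):
        self.processed_ids = set()     # Idempotent Consumer deduplication table
        self.entities = []             # committed database writes
        self.outbound = []             # committed outbound events
        self.offset_committed = False  # True once the event is marked as consumed


def commit_db(state, event_id):
    state.processed_ids.add(event_id)
    state.entities.append(event_id)


def commit_kafka(state, event_id):
    state.outbound.append(event_id)
    state.offset_committed = True


def deliver(state, event_id, db_first, crash_between):
    if event_id in state.processed_ids:
        state.offset_committed = True  # duplicate: skip processing, mark as consumed
        return
    first, second = (commit_db, commit_kafka) if db_first else (commit_kafka, commit_db)
    first(state, event_id)
    if crash_between:
        raise ServiceCrash()
    second(state, event_id)


def run(db_first):
    state = State()
    try:
        deliver(state, "evt-1", db_first, crash_between=True)
    except ServiceCrash:
        pass
    if not state.offset_committed:  # the event is only redelivered if not consumed
        deliver(state, "evt-1", db_first, crash_between=False)
    return state


db_first = run(db_first=True)
kafka_first = run(db_first=False)
```

With the database commit first, the redelivered event is deduplicated and `db_first.outbound` stays empty. With the Kafka commit first, there is no redelivery and `kafka_first.entities` stays empty.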
