Introduction

Managing duplicate events is an inevitable aspect of distributed messaging using Kafka. This article looks at the patterns that can be applied in order to deduplicate these events.

Duplicate Events

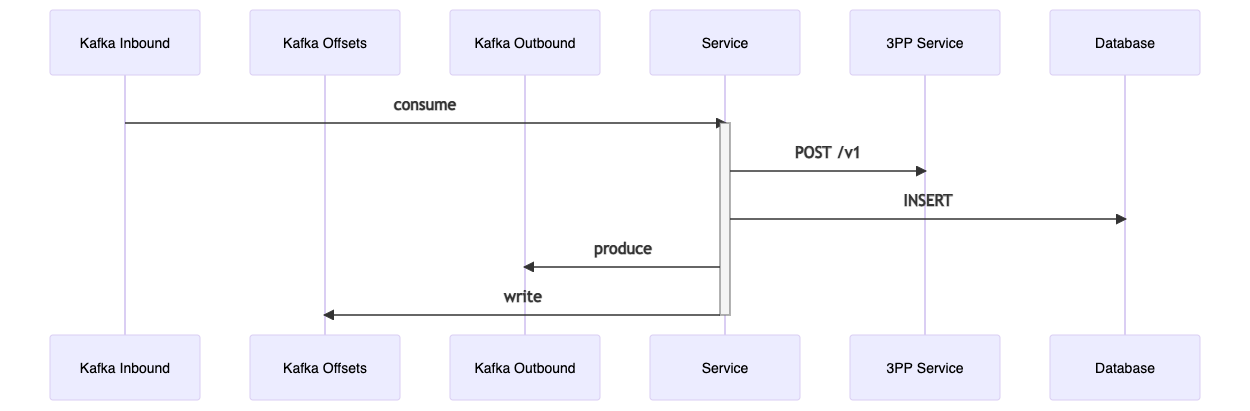

Consider the following flow where a message is consumed from a topic, triggering a REST POST call to a third party service, a database INSERT to create a new record, and the publishing of a resulting event to an outbound topic.

1. Service consumes a message from Kafka.

2. Service makes a REST POST request to a third party service.

3. Service INSERTs a record in the database.

4. Service publishes a resulting event to Kafka.

A failure could happen at any stage in this flow. The flow must therefore not mark the message as consumed until processing has completed. This ensures that, upon failure, the message is redelivered to a consumer in the consumer group rather than being lost. The deduplication patterns that are applied then determine which duplicates can occur: a duplicate POST, a duplicate database INSERT, or a duplicate outbound event.
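The redelivery behaviour described above can be illustrated with a small in-memory simulation. This is only a sketch: `InMemoryTopic`, `run_consumer` and `process` are hypothetical names invented for the illustration and are not part of any Kafka client API. The offset is committed only after processing succeeds, so a failure before the publish step causes the POST and INSERT to be repeated on redelivery.

```python
class InMemoryTopic:
    """Hypothetical stand-in for a single-partition Kafka topic."""

    def __init__(self, messages):
        self.messages = list(messages)
        self.committed_offset = 0  # next offset the consumer group will read

    def poll(self):
        """Return (offset, message) at the committed offset, or None if caught up."""
        if self.committed_offset < len(self.messages):
            return self.committed_offset, self.messages[self.committed_offset]
        return None

    def commit(self, offset):
        """Mark everything up to and including `offset` as consumed."""
        self.committed_offset = offset + 1


def run_consumer(topic, process, max_polls=10):
    """Poll, process, and commit the offset only after processing succeeds."""
    for _ in range(max_polls):
        record = topic.poll()
        if record is None:
            break
        offset, message = record
        try:
            process(message)
        except RuntimeError:
            continue  # offset not committed, so the next poll redelivers the message
        topic.commit(offset)


actions = []         # side effects, in the order they happened
failed_once = set()  # messages whose publish step has already failed once


def process(message):
    actions.append(("POST", message))
    actions.append(("INSERT", message))
    if message not in failed_once:
        failed_once.add(message)
        raise RuntimeError("simulated failure before the outbound event is published")
    actions.append(("PRODUCE", message))


topic = InMemoryTopic(["order-1"])
run_consumer(topic, process)
print(actions)
```

With no deduplication pattern in place, the POST and the INSERT each happen twice for the single inbound message, while the outbound event is produced once.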

Deduplication Patterns

Patterns that cater for duplicate messages:

1. Idempotent Consumer Pattern

- Track received message IDs in the database.

- Use a locking flush strategy to stop duplicates being processed until the message ID is committed or rolled back.

- Upon successful commit, any duplicate awaiting the lock is discarded.

- Message ID commit happens in the same transaction as any other database writes, making these actions atomic.

2. Transactional Outbox - messages published to outbox table with CDC

- Outbound message committed to the outbox table in DB in the same transaction as any other database writes, making these actions atomic.

- Kafka Connect CDC (change data capture) publishes messages to Kafka outbound topic.

3. Kafka Transaction API - for exactly-once delivery semantics

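- Kafka’s exactly-once semantics guarantee that the outbound events and the consumer offsets are committed atomically, so the consume, process and produce flow takes effect exactly once for downstream consumers that read only committed messages.

- It is important to understand that the consume and process steps themselves can still happen multiple times, so side effects outside Kafka, such as the POST and the INSERT, can be duplicated.

- Uses the transaction log as a centralised, single source of truth for all ongoing transactions, which is what makes the commit atomic.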
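As an illustration of the Idempotent Consumer pattern listed above, the following minimal sketch uses Python's built-in `sqlite3` module. The table names (`processed_messages`, `orders`) and the `handle` function are assumptions made for the example. The message ID is inserted under a primary key constraint in the same transaction as the business write, so a redelivered message violates the constraint and the whole transaction rolls back.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE processed_messages (message_id TEXT PRIMARY KEY)")
conn.execute("CREATE TABLE orders (order_id TEXT, payload TEXT)")
conn.commit()


def handle(conn, message_id, order_id, payload):
    """Return True if the message was processed, False if it was a duplicate."""
    try:
        with conn:  # commits on success, rolls back if an exception is raised
            # Recording the message ID first means a duplicate fails here,
            # before any business data is written.
            conn.execute(
                "INSERT INTO processed_messages (message_id) VALUES (?)",
                (message_id,),
            )
            conn.execute(
                "INSERT INTO orders (order_id, payload) VALUES (?, ?)",
                (order_id, payload),
            )
        return True
    except sqlite3.IntegrityError:
        return False  # message ID already committed: discard the duplicate


first = handle(conn, "msg-1", "order-1", "{}")
second = handle(conn, "msg-1", "order-1", "{}")  # redelivered duplicate
order_count = conn.execute("SELECT COUNT(*) FROM orders").fetchone()[0]
print(first, second, order_count)
```

This single-connection sketch does not show the locking behaviour. In a multi-instance service, the message ID insert would typically be flushed immediately so that a concurrent duplicate blocks on the unique index until the first transaction commits or rolls back.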

It is also possible to combine the Idempotent Consumer pattern with the Transactional Outbox pattern.
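A rough sketch of that combination, again using `sqlite3`, is shown here. The schema, the `order-events` topic name, and the `handle` and `relay_outbox` functions are all hypothetical. In particular, `relay_outbox` is only a stand-in for the CDC connector: a real connector reads the database's change log rather than selecting and deleting rows.

```python
import json
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE processed_messages (message_id TEXT PRIMARY KEY);
    CREATE TABLE orders (order_id TEXT PRIMARY KEY, status TEXT);
    CREATE TABLE outbox (id INTEGER PRIMARY KEY AUTOINCREMENT, topic TEXT, payload TEXT);
""")


def handle(conn, message_id, order_id):
    """Dedupe, write the record, and queue the outbound event in one transaction."""
    try:
        with conn:  # one commit covers all three inserts, or none of them
            conn.execute("INSERT INTO processed_messages VALUES (?)", (message_id,))
            conn.execute("INSERT INTO orders VALUES (?, ?)", (order_id, "CREATED"))
            conn.execute(
                "INSERT INTO outbox (topic, payload) VALUES (?, ?)",
                ("order-events", json.dumps({"orderId": order_id, "status": "CREATED"})),
            )
        return True
    except sqlite3.IntegrityError:
        return False  # duplicate message: nothing is written, nothing is queued


def relay_outbox(conn, publish):
    """Hypothetical stand-in for CDC: publish each outbox row, then remove it."""
    rows = conn.execute("SELECT id, topic, payload FROM outbox ORDER BY id").fetchall()
    for row_id, topic, payload in rows:
        publish(topic, payload)
        with conn:
            conn.execute("DELETE FROM outbox WHERE id = ?", (row_id,))


published = []
handle(conn, "msg-1", "order-1")
handle(conn, "msg-1", "order-1")  # redelivered duplicate: whole transaction rolls back
relay_outbox(conn, lambda topic, payload: published.append((topic, payload)))
print(published)
```

Because the outbox row is written in the same transaction as the message ID and the order record, the duplicate delivery produces neither a second INSERT nor a second outbound event.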

Database transactions and Kafka transactions cannot be committed atomically. While a chained transaction manager can be used to commit the two in sequence, this is a best-effort approach rather than a true two-phase commit: it does not guarantee that both transactions will complete atomically, and resources can be left in an inconsistent state. This means it is not possible to combine the Idempotent Consumer pattern with the Kafka Transactions pattern without risking data loss.
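The inconsistency can be sketched with two independent fake transactions. `FakeTransaction` and `commit_in_sequence` are hypothetical and do not correspond to any real client or transaction manager API, and the commit order in a real setup may differ. The point is only that once the first commit has succeeded, a failure of the second cannot undo it.

```python
class FakeTransaction:
    """Hypothetical resource with its own independent commit."""

    def __init__(self, name, fail_on_commit=False):
        self.name = name
        self.fail_on_commit = fail_on_commit
        self.state = "open"

    def commit(self):
        if self.fail_on_commit:
            self.state = "aborted"
            raise RuntimeError(f"{self.name} commit failed")
        self.state = "committed"

    def rollback(self):
        if self.state == "open":
            self.state = "aborted"  # a committed transaction can no longer be undone


def commit_in_sequence(transactions):
    """Commit each transaction in turn; on failure roll back whatever is still open."""
    try:
        for tx in transactions:
            tx.commit()
    except RuntimeError:
        for tx in transactions:
            tx.rollback()
        return False
    return True


db_tx = FakeTransaction("database")
kafka_tx = FakeTransaction("kafka", fail_on_commit=True)
ok = commit_in_sequence([db_tx, kafka_tx])
print(ok, db_tx.state, kafka_tx.state)  # False committed aborted
```

In this ordering the message ID is committed to the database while the outbound event is aborted. When the message is redelivered, the Idempotent Consumer discards it as a duplicate, so the event is never published.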

Applying The Patterns

This sequence diagram describes the flow illustrated at the top of this article.

Only once the resulting event is published is the consumer offsets Kafka topic written to. This marks the message as consumed, and it will not be re-polled by another consumer in the same consumer group. If the consumer offsets are not written due to a preceding failure, then the message will be re-polled.

The following table summarises what duplicate actions (POST request, database INSERT, outbound event PRODUCE) can occur at different failure points based on which patterns have been applied.

Some scenarios are not applicable to every pattern as the failure points can differ based on what patterns are in place. Each scenario for each pattern is diagrammed in the second part of this article.

The 'Consume Times Out' scenario refers to the case where a consumer does not finish processing and poll again before the poll timeout expires. The Kafka Broker then believes the original consumer may have died, so the message is redelivered to a second consumer instance while the first may still be processing it.

Recommendations

Implementing the Idempotent Consumer and Transactional Outbox patterns together is the recommended approach to minimise duplicate actions. The only remaining risk is a duplicate POST. While the same is true when combining the Idempotent Consumer with Kafka Transactions, that combination has two transaction managers in play, meaning more complexity, more things to go wrong, and more testing needed.

For each of these approaches there is nothing that can be done to completely remove the chance of a duplicate POST. It is therefore important to ensure that the call is itself idempotent, with the third party service responsible for honouring that idempotency requirement.
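One common way to make such a call idempotent is for the caller to send an idempotency key that the receiving service deduplicates on. The sketch below assumes this approach. `ThirdPartyService` and `idempotency_key_for` are hypothetical, and whether a given third party supports such a key depends on its API.

```python
import uuid


class ThirdPartyService:
    """Hypothetical third party endpoint that deduplicates on an idempotency key."""

    def __init__(self):
        self.responses = {}       # idempotency key -> response already returned
        self.records_created = 0  # how many times the POST actually took effect

    def post(self, idempotency_key, body):
        if idempotency_key in self.responses:
            # Repeat of an earlier request: return the original response unchanged.
            return self.responses[idempotency_key]
        self.records_created += 1
        response = {"status": 201, "id": self.records_created, "body": body}
        self.responses[idempotency_key] = response
        return response


def idempotency_key_for(message_id):
    """Derive a stable key from the message ID so a retry sends the same key."""
    return str(uuid.uuid5(uuid.NAMESPACE_URL, message_id))


service = ThirdPartyService()
key = idempotency_key_for("msg-1")
first = service.post(key, {"amount": 10})
second = service.post(key, {"amount": 10})  # duplicate POST after redelivery
print(first == second, service.records_created)
```

Deriving the key from the inbound message ID, rather than generating a random one per attempt, is what allows the duplicate POST to be recognised after the message is redelivered.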

Next...

In the second part of this article the different deduplication patterns are drawn out in detail for each failure scenario to show how and where duplicates occur.