Introduction

Engaging in any IT project always brings with it a myriad of challenges. Every project is different, but whether starting on a new greenfield project in the inception phase or joining a project to enhance a well established system, there are a number of key principles that are often overlooked but should be applied to all. These are: keep it simple, draw it out, and test it early.

Keep IT Simple

Keep it simple applies to every aspect of a project, from selecting a tech stack through to the architecture, the design and the implementation. When it comes to technology choices, boring is often better. A dependable SQL database such as Postgres, or a mature and well understood programming language like Java, should win out unless there are really good reasons to opt for alternatives. Bleeding edge tech can be enticing, and an appealing addition to the CV, but as a project evolves these choices can come back to bite in the form of unexpected complexities or unwanted behaviours. Hiring developers for 'latest and greatest' tech can prove harder and more costly too, as the pool of candidates will typically be smaller, particularly for experienced developers.

As Dan McKinley writes in his blog Choose Boring Technology:

Let's say every company gets about three innovation tokens. You can spend these however you want, but the supply is fixed for a long while .... If you choose to write your website in NodeJS, you just spent one of your innovation tokens. If you choose to use MongoDB, you just spent one of your innovation tokens. If you choose to use service discovery tech that's existed for a year or less, you just spent one of your innovation tokens.

As this suggests, a common mistake is to throw too many technologies into a tech stack. Every addition to an architecture adds new failure points, new edge cases to cater for, more testing, more complexity when deploying, and the need for more expertise in the team to understand it comprehensively. Again, there may be good reasons to add the technology, but can the requirements be met with a simpler approach? This applies equally to frameworks and libraries for the chosen language. In each case a careful evaluation should be undertaken. Is the technology or the framework mature, battle hardened, fit for purpose and well supported? Is it really needed, or is there a simpler choice?

Try not to over-engineer a system, as it becomes harder to understand, harder to maintain, and harder to evolve. Beware of the temptation to prematurely optimise a system. Is there a need to achieve high scalability or fast start up times from day one? Optimising for these too soon leads down the road of more complexity and more delays, when the basic use cases should be addressed first. Inevitably at times there will be problems and challenges that are difficult to solve. Break these down into smaller and well understood pieces which can then be tackled and tested in turn.

Draw IT Out

It is all too common for a team to jump into the development of a new software feature, or design a new CI pipeline, or evolve the architecture of a system without drawing these out. However, it is important to first draw out the business problem so that this is clearly understood, and then to carefully diagram the designs that are to be implemented. Diagrams often tease out questions that need answering and which could otherwise trip up the work once it is well underway, costing time and effort to solve. Diagrams are also a great tool for determining how and where work can be parallelised.

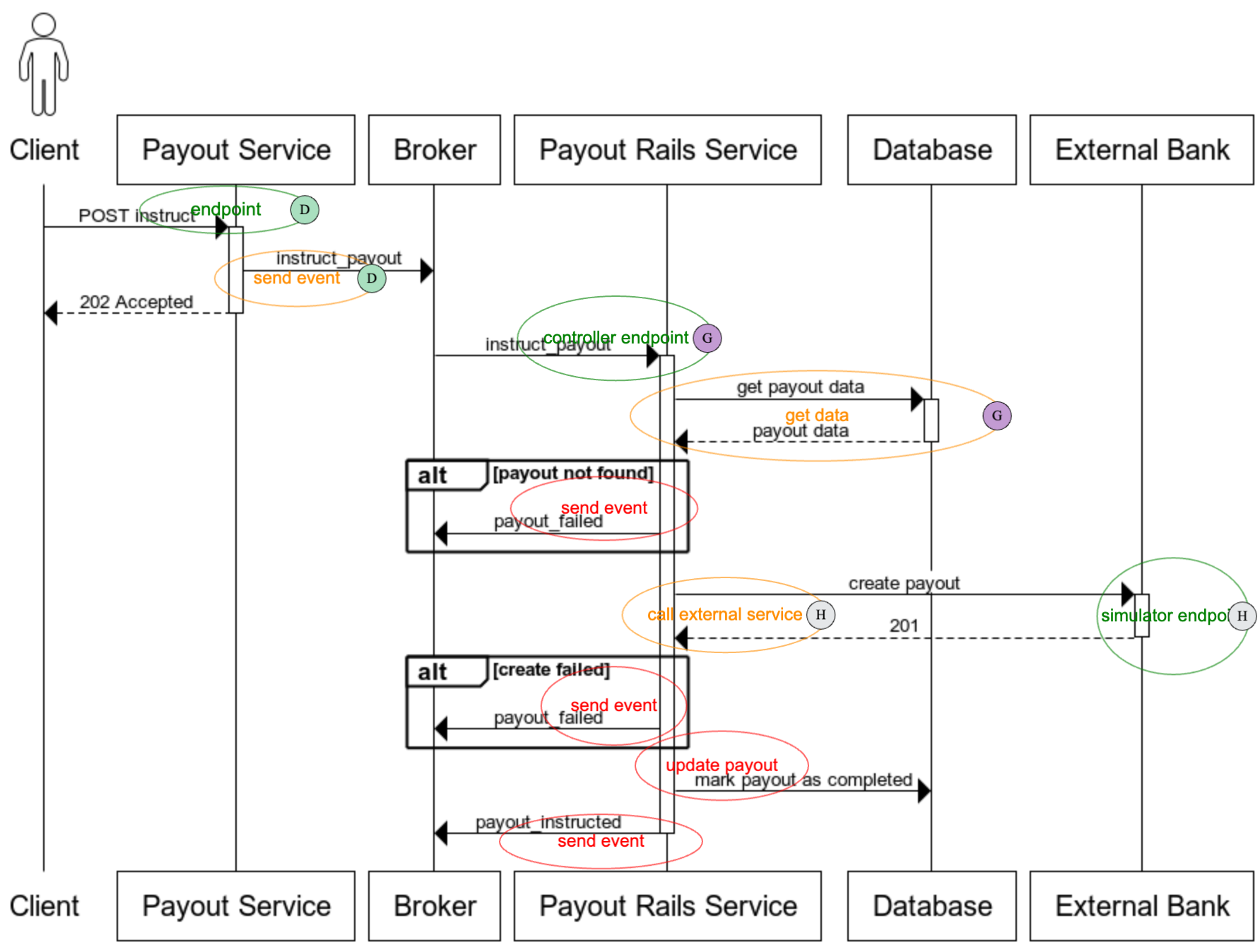

For example, when a development team is tasked with putting together a new feature, the tasks are created and the team dive into the implementation. They can then often find themselves overlapping areas of implementation, perhaps duplicating effort. Or one developer might find themselves blocked, not having realised that their task depends on another developer's task being completed before they can work efficiently on their own. By clearly drawing out such a new feature with sequence diagrams and using a tool like Ovalview, tasks can be circled (well, “ovalled” in this case), making it clear where tasks can be picked up in parallel, and where dependencies on other tasks might exist. Such tasks may map one to one with tasks listed under a story in Jira, but that list in Jira gives no indication of the order in which they should be picked up or what can be worked on in parallel.
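The dependency information that such a diagram captures can also be expressed in code. The following is a minimal sketch, assuming a hypothetical set of tasks and dependencies that are not taken from any real project. It uses the Python standard library `graphlib` module to group tasks into waves, where every task in a wave can be picked up in parallel.

```python
from graphlib import TopologicalSorter

# Hypothetical tasks for a feature; each task maps to the tasks it depends on.
dependencies = {
    "define API contract": set(),
    "create database schema": set(),
    "implement repository": {"create database schema"},
    "implement REST endpoint": {"define API contract", "implement repository"},
    "write end to end test": {"implement REST endpoint"},
}


def parallel_waves(deps):
    """Group tasks into waves; tasks in the same wave have no unmet dependencies."""
    sorter = TopologicalSorter(deps)
    sorter.prepare()  # raises graphlib.CycleError if the dependencies are circular
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # everything that can start right now
        waves.append(ready)
        sorter.done(*ready)  # mark the wave complete to unlock dependent tasks
    return waves


waves = parallel_waves(dependencies)
for number, wave in enumerate(waves, start=1):
    print(f"Wave {number}: {', '.join(wave)}")
```

In this sketch the first wave contains two tasks that can be started at the same time, while the remaining tasks must wait for their predecessors. A flat task list carries none of this ordering, which is the gap the diagram fills.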

Just as importantly, diagrams provide an excellent communication tool. When discussing a new feature or new system, being able to talk to a diagram is essential for confidence that the whole team is on the same page. For feature team development, it is recommended to use the diagram as the backdrop to daily standups. With the visual aid of the diagram, everyone attending can clearly understand the context of each task being discussed as the team members give their updates. This is particularly true for new members of the team, helping to get them up to speed.

Diagrams should be kept close to the code, checked into source control so that changes and revisions are saved and versioned along with the code. For consistency, ensure one diagramming tool is used by the team or organisation, be it Mermaid, OmniGraffle, draw.io and so on. Just as important as creating the diagrams is ensuring that they are kept up to date with any changes as part of the development process. This should become part of the Definition of Done for a task or story.

Diagrams are the best way for someone to learn a new part of the software, or the architecture of the system that they are dealing with. They provide documentation of the system in their own right. Few things are less helpful than finding a diagram relevant to the area of interest, only to discover that it has not been kept up to date. Diagrams can also be surfaced on the internal wiki, providing quick and easy access, although keeping these up to date requires good team discipline. These diagrams do not need to be, and typically should not be, rigorous UML artefacts. Keeping them simple aids their readability, maintainability, and ease of understanding.

Test IT Early

Comprehensive and effective testing is the cornerstone of system development, be it testing a new process or testing new software. Testing needs to be baked into the development process as a key upfront consideration. Under pressure to hit deadlines, corners can be cut, and all too often one of the corners cut is testing. This can be particularly true early on in a project, but it is a mistake which only leads to poor quality software, bugs, and technical debt. Test it early means getting the right tests in place from the outset.

Developing a system or process that is hard to test means that there will be problems down the line costing time and effort to resolve, and this will impact deadlines and maybe stall a project. This ties back to choosing the right technologies to build a system with. If they do not lend themselves to ease of testing then the negative consequences of this will become apparent over time.

A common trap to fall into is favouring quantity over quality of tests. Code coverage tools such as Cobertura and SonarQube can be used to enforce a percentage threshold of code that must be covered by tests. Achieving a high percentage of coverage does not equate to a high quality of tests, so these tools are not the be all and end all. Writing good tests with clear assertions that prove a test is achieving its goal comes with experience, and techniques such as Test Driven Development can help.
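The gap between coverage and quality can be illustrated with a small sketch. The `apply_discount` function and both tests below are hypothetical, written only for illustration. Both tests execute the main path of the function, so a coverage tool would count those lines as covered either way, but only the second would fail if the calculation were wrong.

```python
def apply_discount(price, percent):
    """Return the price reduced by the given percentage (hypothetical example)."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)


def test_discount_weak():
    # Executes the code, so it adds to coverage, but it asserts nothing:
    # it would still pass if the calculation returned the wrong value.
    apply_discount(200.0, 25)


def test_discount_strong():
    # Clear assertions on the result, including a boundary and an error case.
    assert apply_discount(200.0, 25) == 150.0
    assert apply_discount(80.0, 0) == 80.0
    try:
        apply_discount(80.0, 120)
    except ValueError:
        pass  # the invalid percentage was rejected as expected
    else:
        raise AssertionError("expected ValueError for percent above 100")


test_discount_weak()
test_discount_strong()
```

The weak test is the kind that tends to appear when a coverage threshold is treated as the goal rather than as a signal.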

Importantly, tests need to be written at different levels of granularity, from the finest grained unit tests, through integration tests, to the coarsest system integration tests. These tests should be automated, so that they can be run both by the developer/tester on their machine and in the CI pipeline when code is committed. Unit tests are the fastest to run, live closest to the code, and so give fast feedback to the developer. However, they do not validate that the different units of work integrate correctly with each other. Integration tests, which commonly use in-memory versions of resources such as databases and brokers, can validate these integrations.
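As a minimal sketch of an integration test against an in-memory resource, the example below uses an in-memory SQLite database from the Python standard library. The `UserRepository` class and its table are assumptions made for illustration. Unlike a unit test with a mocked connection, this test proves that the SQL statements and the database constraint actually work together.

```python
import sqlite3


class UserRepository:
    """Hypothetical repository that stores user names in a SQL database."""

    def __init__(self, connection):
        self.connection = connection
        self.connection.execute(
            "CREATE TABLE IF NOT EXISTS users "
            "(id INTEGER PRIMARY KEY, name TEXT NOT NULL UNIQUE)"
        )

    def add(self, name):
        cursor = self.connection.execute(
            "INSERT INTO users (name) VALUES (?)", (name,)
        )
        self.connection.commit()
        return cursor.lastrowid

    def find_name(self, user_id):
        row = self.connection.execute(
            "SELECT name FROM users WHERE id = ?", (user_id,)
        ).fetchone()
        return row[0] if row else None


def test_repository_round_trip():
    # ":memory:" gives a fresh database that exists only for this connection.
    connection = sqlite3.connect(":memory:")
    try:
        repository = UserRepository(connection)
        user_id = repository.add("alice")
        assert repository.find_name(user_id) == "alice"
        assert repository.find_name(user_id + 1) is None
        # The UNIQUE constraint is enforced by the database itself.
        try:
            repository.add("alice")
        except sqlite3.IntegrityError:
            pass
        else:
            raise AssertionError("expected the duplicate name to be rejected")
    finally:
        connection.close()


test_repository_round_trip()
```

Because the database lives in memory, the test starts from a clean state on every run and remains fast enough to run on each commit.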

End to end (system) tests prove deployment configurations, and integration with real versions of these resources. The feedback to the developer on these coarser grained tests is slower as they take longer to run. So the principle of shifting left is key here: test as much as possible closer to the code for quicker test runs and faster feedback. This gives rise to the notion of the Test Pyramid, introduced by Mike Cohn and popularised by Martin Fowler, with the most numerous tests being the finest grained unit tests at the bottom of the pyramid, and the fewest being the coarsest tests at the top.

With any kind of distributed system such as a microservice architecture, it is important to employ contract testing to validate that services can communicate with each other as expected. A contract specifies the expected behaviour of a service, so this testing ensures that interactions between services conform to these predefined contracts. Similarly it may be important to implement performance tests, resilience tests and load tests as a system matures, to ensure it meets its non-functional requirements, and indeed does not degrade over time. Processes such as disaster recovery and pipeline configurations, if applicable, all need to be carefully tested too.
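The idea behind a contract check can be sketched in a few lines. Everything below is hypothetical: the contract, the field names, and the `get_order` stand-in for a provider are assumptions for illustration, and in practice a dedicated contract testing tool would usually manage the contracts and their verification. The sketch only shows the core check, that a provider response contains the fields a consumer relies on, with the expected types.

```python
# Contract published by a hypothetical consumer: the fields it reads and their types.
ORDER_CONTRACT = {
    "order_id": str,
    "status": str,
    "total_pence": int,
}


def get_order(order_id):
    """Stand-in for the provider service's handler (hypothetical)."""
    return {
        "order_id": order_id,
        "status": "SHIPPED",
        "total_pence": 1999,
        "warehouse": "LHR-2",  # extra fields are fine; the consumer ignores them
    }


def contract_violations(response, contract):
    """Return a list describing every way the response breaks the contract."""
    violations = []
    for field, expected_type in contract.items():
        if field not in response:
            violations.append(f"missing field: {field}")
        elif not isinstance(response[field], expected_type):
            actual = type(response[field]).__name__
            violations.append(
                f"{field}: expected {expected_type.__name__}, got {actual}"
            )
    return violations


# The provider honours the contract, so there is nothing to report.
assert contract_violations(get_order("A-100"), ORDER_CONTRACT) == []

# A provider change that turns the total into a string breaks the consumer.
broken = {"order_id": "A-100", "status": "SHIPPED", "total_pence": "19.99"}
print(contract_violations(broken, ORDER_CONTRACT))
```

Running a check like this in the provider's pipeline means a breaking change is caught before deployment rather than by a failing consumer.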

Test early and comprehensively to avoid problems that will otherwise undoubtedly cost time and effort down the line.

Summary

Keep it simple, draw it out, test it early - these are three key principles that should be applied to every IT project with the goal of achieving a successful outcome. Without these there is a high risk of a build up of problems that will negatively impact the project further down the line, jeopardising deadlines and costing time and effort to address.

Resources

- Should You Draw It? (Yes You Should!): The importance of diagramming, and how Ovalview can help.

- Testability Is An Architectural Choice: Bringing testing concerns to the heart of architectural choices.

- Balancing The Test Automation Pyramid: Understanding how to apply the test pyramid.

- Choose Boring Technology: Article by Dan McKinley.

- Test Pyramid: Article by Martin Fowler.