Dan Edwards - January 2022

Overview

Ask 10 people what a software architect is and you'll get 10 different answers. For me, the software architect position is a role that requires as much management as it does technical expertise. It means engaging with stakeholders and technical specialists to determine what is actually demanded of the platform, and identifying the best way to meet those needs.

Only once the problem is understood do we look to identify which technologies we can employ to solve it (not the other way around, as is too frequently the case). There are many elements to consider when making technology decisions.

Testability is one element of technology choice that an architect needs to consider, and one that is frequently overlooked.

What is Testability?

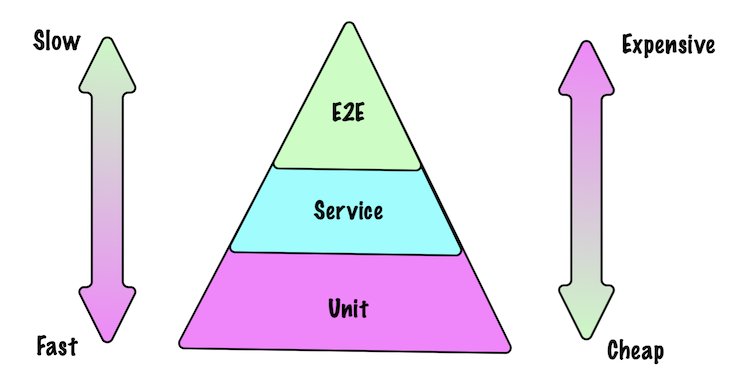

Thinking about a simplistic testing pyramid will help us establish the testability of our tech choices. If you're not familiar with the pyramid and some common pitfalls with the model, this is a great article: Balancing The Test Automation Pyramid.

It can be all too tempting to choose the latest and greatest cutting edge technologies to add to your tech stack, but where do these technology choices sit in the pyramid? Where do the overlaps of these technology choices sit on the pyramid? Get these choices wrong and it may become very difficult to satisfactorily test your product.

Real World Illustrations

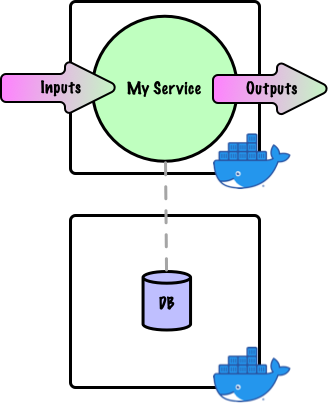

Let's look at an example. Assume your new development requires some DB persistence. Do your tech choices (in this case your language and DB of choice) allow for the use of an in-memory DB? If so, great: an in-memory DB option means shorter feedback cycles and more representative testing than can be expected from mocks. However, if your technology choices prevent this level of in-memory testing, then you're starting to climb the pyramid, and perhaps needlessly.
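As a minimal sketch of what this looks like in practice, assume a Python service whose persistence layer can run against SQLite's in-memory mode. The `UserRepository` class, the `users` table and the test name are hypothetical and exist only for illustration. The point is that the test exercises a real SQL engine, so a constraint violation is caught by the database itself rather than by a mock that someone remembered to program.

```python
import sqlite3


class UserRepository:
    """Hypothetical repository used only to illustrate the idea."""

    def __init__(self, conn):
        self.conn = conn
        self.conn.execute(
            "CREATE TABLE IF NOT EXISTS users ("
            "id INTEGER PRIMARY KEY, name TEXT NOT NULL UNIQUE)"
        )

    def add(self, name):
        # The connection context manager commits on success
        # and rolls back if the statement raises.
        with self.conn:
            cur = self.conn.execute(
                "INSERT INTO users (name) VALUES (?)", (name,)
            )
        return cur.lastrowid

    def find(self, user_id):
        row = self.conn.execute(
            "SELECT name FROM users WHERE id = ?", (user_id,)
        ).fetchone()
        return row[0] if row else None


def test_round_trip_and_unique_constraint():
    # ":memory:" gives each test a fresh, throwaway database.
    conn = sqlite3.connect(":memory:")
    repo = UserRepository(conn)

    user_id = repo.add("ada")
    assert repo.find(user_id) == "ada"

    # The real engine enforces the UNIQUE constraint for us.
    try:
        repo.add("ada")
    except sqlite3.IntegrityError:
        pass
    else:
        raise AssertionError("expected a duplicate name to be rejected")

    conn.close()
```

Whether an in-memory engine is representative enough depends on how closely it matches your production DB, which is part of the trade-off the architect has to weigh.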

Pushing left is the goal (or down, in the case of our pyramid) where possible. This doesn't mean rejecting any technology you can't test at the base of the pyramid, but it does mean that you should be aware of the trade-off and make an informed choice. That's the call the architect should be making when considering technology choices.

Our DB scenario above is valid but simplistic. Let's assume a scenario in which Kafka is now required in our development. We have the same consideration as before: how far left can we push these tests? But now we're faced with a greater challenge. One of our greatest concerns is ensuring the integrity of the transactional hand-offs, particularly when something fails partway through. These failure scenarios are certainly edge cases, but if we want to ensure the integrity of our app then we need to replicate them, and doing so requires tight coordination between modules which is only achievable via code. This cannot be achieved further up the pyramid. Our architectural choices with this kind of setup could be preventing testing of these edge cases.
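To make "tight coordination via code" concrete, here is a hedged sketch of injecting a failure at an exact point in a hand-off. It does not use Kafka or any Kafka client: `FakeBroker`, `PublishError`, `place_order` and the `orders` table are all hypothetical stand-ins, and a real broker has delivery semantics this fake ignores. What it shows is the kind of interleaving (the write succeeds, then the publish fails) that is easy to arrange in-process and very hard to arrange reliably in a deployed environment.

```python
import sqlite3


class PublishError(Exception):
    """Raised by the fake broker when a failure is injected."""


class FakeBroker:
    """In-process stand-in for a message broker (not a Kafka client)."""

    def __init__(self, fail_next=False):
        self.fail_next = fail_next
        self.messages = []

    def send(self, topic, value):
        if self.fail_next:
            self.fail_next = False
            raise PublishError("injected failure")
        self.messages.append((topic, value))


def place_order(conn, broker, order_id):
    # The DB write and the publish share one transaction scope:
    # if the publish raises, the connection rolls the write back.
    with conn:
        conn.execute("INSERT INTO orders (id) VALUES (?)", (order_id,))
        broker.send("orders", order_id)


def count_orders(conn):
    return conn.execute("SELECT COUNT(*) FROM orders").fetchone()[0]


def test_failed_publish_leaves_no_orphaned_row():
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (id TEXT PRIMARY KEY)")
    broker = FakeBroker(fail_next=True)

    # Edge case: the insert succeeds, then the hand-off fails.
    try:
        place_order(conn, broker, "o-1")
    except PublishError:
        pass

    assert count_orders(conn) == 0
    assert broker.messages == []

    # A retry with a healthy broker completes both halves.
    place_order(conn, broker, "o-1")
    assert count_orders(conn) == 1
    assert broker.messages == [("orders", "o-1")]
    conn.close()
```

If your chosen technologies give you no seam at which to inject a failure like this, that is a signal the edge case may only be testable further up the pyramid, or not at all.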

Of course, these scenarios may not be of concern to all applications. This is a call the architect must make. If they are a concern to your application then how are you going to verify the integrity? If your tech does not provide a way to finely interleave these technologies to recreate such scenarios, then you may not be able to verify these scenarios at all.

Another scenario: serverless has been pushed as a quick win thanks to its low running costs and scalability, but if we choose serverless, how easily is it tested? Unit testing isn’t typically an issue, but integration testing can be more challenging. And if your system already has container-based services, introducing serverless adds further complication to your testing conundrum in the form of additional infrastructure.
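The unit-testing half of that claim can be sketched as follows, assuming a Python function with the common `handler(event, context)` shape. The event layout (a JSON string under `"body"`), the `make_handler` factory and the dictionary used as a store are assumptions made for illustration, not any particular provider's contract. Because the handler is a plain function with its dependency passed in, it sits comfortably at the base of the pyramid; what this test cannot tell you is whether the trigger, permissions and real datastore are wired together correctly.

```python
import json


def make_handler(store):
    """Build a handler around an injected store (a dict in this sketch)."""

    def handler(event, context):
        body = json.loads(event.get("body") or "{}")
        name = body.get("name")
        if not name:
            return {
                "statusCode": 400,
                "body": json.dumps({"error": "name is required"}),
            }
        store[name] = body
        return {"statusCode": 201, "body": json.dumps({"saved": name})}

    return handler


def test_handler_saves_valid_input_and_rejects_invalid():
    store = {}
    handler = make_handler(store)

    ok = handler({"body": json.dumps({"name": "ada"})}, None)
    assert ok["statusCode"] == 201
    assert store == {"ada": {"name": "ada"}}

    bad = handler({"body": "{}"}, None)
    assert bad["statusCode"] == 400
    assert store == {"ada": {"name": "ada"}}
```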

We do have options to resolve these scenarios, but first it’s worth taking a slight segue to think about Maintainability. On the Venn diagram of ‘ilities’ there’s a natural overlap of Testability and Maintainability. If a bug is detected in a system with high testability, then identifying, replicating and fixing the bug becomes a simpler undertaking. Debugging multiple services across a remote environment is not a simple undertaking.

So what are our options for validating such a system? One option is to perform this validation within a dedicated environment, but consider the deployment time and the cost of the additional environment. This pushes our testing up the pyramid, and makes it more difficult to diagnose any issues the tests uncover. A second option is to replicate the environment locally using third-party tools such as Testcontainers and LocalStack. These are great tools, but we’re creeping up the pyramid again, and we’re investing time and complexity in a solution that we would prefer to avoid.

Sometimes we do not have the option to swap out technologies, and we may end up with tests at a higher level in the pyramid than we’d prefer. That’s fine, so long as we’ve moved these tests as far left as possible. But when we do get to make a call on the tech, we should consider the impact of these choices on our ability to provide fast feedback on the validity of our development.

Conclusion

When choosing a technology there are many criteria to consider, and how well the technology fits your testing needs should be one of them. Using technologies which lend themselves to fast feedback and fine-grained integration provides the opportunity for high levels of test coverage, and the confidence which accompanies it. Failing to consider testability when choosing a technology leaves you exposed to the risk of inadequate test coverage and lower levels of confidence in your solution.

View this article on our Medium Publication.