Introduction

Whether you're about to embark on a new project or your project is already well established, introducing automated testing early will boost its chances of success and improve the quality of the end product. Test automation is a best practice that developers and QA engineers alike should adopt.

Let's look at why automation is beneficial, why applying it retrospectively is hard, and how we can tackle doing so.

Testing — Establishing best practice from the outset

Why we Test

Software should be predictable and reliable. These two qualities generate higher levels of confidence in the software, and this confidence is earned because the behaviour of the software has been rigorously validated.

Manual testing is often the first foray into software testing, but it is time-consuming and has long feedback loops. Shortening the feedback loop between a test and the engineer yields an efficiency gain, and automating the tests is how we achieve that faster feedback.

What is Automated Testing?

We can say our tests are automated when they report whether the software meets the defined criteria without requiring human intervention. Of course, human effort is still required to define, build, and maintain these tests.
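As a minimal sketch, assuming a hypothetical `apply_discount` function, an automated test encodes the expected behaviour as assertions that a test runner can execute and judge without anyone watching. Python's standard `unittest` module is used here purely for illustration:

```python
import unittest


def apply_discount(price: float, percent: float) -> float:
    """Hypothetical function under test: apply a percentage discount."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)


class ApplyDiscountTest(unittest.TestCase):
    def test_applies_percentage(self):
        # The expected value is the "defined criterion"; no human checks it.
        self.assertEqual(apply_discount(200.0, 25), 150.0)

    def test_rejects_out_of_range_percent(self):
        with self.assertRaises(ValueError):
            apply_discount(100.0, 150)
```

Saved in a file named like `test_*.py`, running `python -m unittest` discovers and executes these tests and exits with a non-zero status if any fail. That exit status is what lets a CI pipeline act on the result with no human in the loop.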

Automated testing isn't a synonym for quality or completeness. The levels of quality and completeness are provided by the engineers creating and extending these tests. Automation means these tests run faster and more consistently than their manual equivalents. Every change to the code base, including seemingly innocuous ones, should be validated by the test suite to highlight any unintended consequences. Automating your test suites allows you to perform these validations at scale.

A high-quality automated test suite gives you more confidence in the changes you make to the software, allowing you to deploy new releases to clients more frequently.

What to Automate

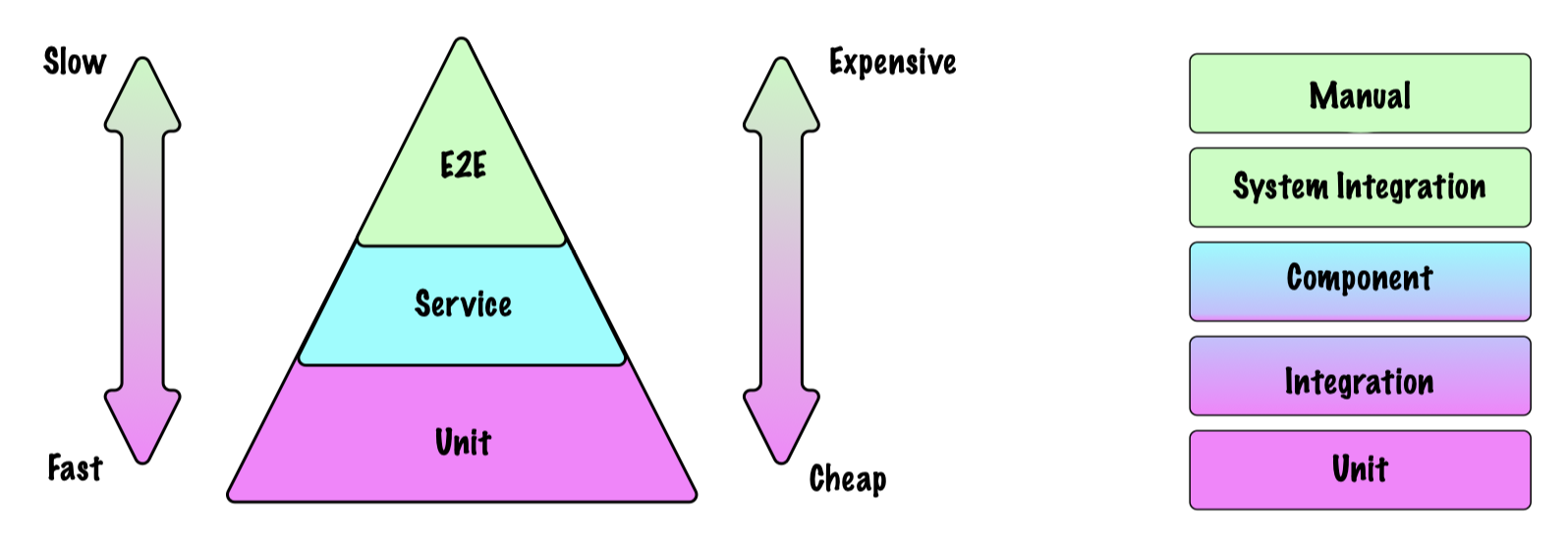

Many types of testing can be applied to software projects; those typically identified by the Test Pyramid include unit, integration, component, and system tests. Each of these layers benefits from automation. As you rise through the layers, setup costs increase and determinism decreases.
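To illustrate how setup cost grows as you move up the pyramid, here is a minimal sketch in which all names (`UserRepository`, `display_name`, and so on) are hypothetical. The unit test swaps the data store for an in-memory fake, while the integration test exercises a real SQLite database, which first needs a connection, a schema, and seed data. The tests are plain functions in the style that pytest collects:

```python
import sqlite3
from typing import Optional, Protocol


class UserRepository(Protocol):
    def find_name(self, user_id: int) -> Optional[str]: ...


def display_name(repo: UserRepository, user_id: int) -> str:
    """Business logic under test: fall back to a placeholder for unknown users."""
    name = repo.find_name(user_id)
    return name if name else "Unknown user"


class FakeRepository:
    """In-memory fake for the unit test: no setup beyond a dict."""

    def __init__(self, users: dict[int, str]) -> None:
        self.users = users

    def find_name(self, user_id: int) -> Optional[str]:
        return self.users.get(user_id)


class SqliteRepository:
    """Real SQLite-backed implementation, exercised by the integration test."""

    def __init__(self, conn: sqlite3.Connection) -> None:
        self.conn = conn

    def find_name(self, user_id: int) -> Optional[str]:
        row = self.conn.execute(
            "SELECT name FROM users WHERE id = ?", (user_id,)
        ).fetchone()
        return row[0] if row else None


def test_display_name_unit() -> None:
    repo = FakeRepository({1: "Ada"})
    assert display_name(repo, 1) == "Ada"
    assert display_name(repo, 2) == "Unknown user"


def test_display_name_integration() -> None:
    # Extra setup: a connection, a schema, and seed data.
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
    conn.execute("INSERT INTO users (id, name) VALUES (1, 'Ada')")
    try:
        repo = SqliteRepository(conn)
        assert display_name(repo, 1) == "Ada"
        assert display_name(repo, 2) == "Unknown user"
    finally:
        conn.close()
```

Component and system tests would add further moving parts, such as running services and networks, which is where determinism starts to suffer.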

Whilst the goal should be to move testing left (moving the testing of code closer to the developer and introducing testing earlier in the project lifecycle), there is still high value in the upper levels of the test pyramid: a test near the top of the pyramid is in no way less valuable. For a more focussed discussion on balancing the test pyramid, see Balancing The Test Automation Pyramid by Matt Bailey.

Automation at each of these layers adds value, so why wouldn't we use them from the outset?

Retrospectively adding testing is hard

As hindsight likes to point out, life would be simpler if certain considerations had been made, or actions taken, at the start. This is especially true of automated testing. There are technical issues you could have avoided had automation been considered from the beginning, but retrospectively adding testing often brings more than just technical challenges.

Getting buy-in

Stakeholders need to accept the new development 'cost'. This non-technical reason is often the biggest stumbling block, ultimately pitting quality against perceived speed.

Adding testing retrospectively takes time. If the system is working* in production, you may struggle to get buy-in from all stakeholders, including developers who are not used to writing tests: 'if it's working without them, why spend the effort?'

*working: running in production, while clients regularly report new issues and untested failure scenarios could strike at any moment. This keeps things exciting, but not in a fun way.

Without automated test suites in place, each new feature takes longer to complete, because every change has to be verified by hand. As a product grows it becomes more complex, and more complexity means more bugs.

Stakeholders may argue that users demand new features and that new features are what drive the business. However, if feature development slows to a standstill because the team is constantly redirected to fix an increasingly fragile, buggy system, then users will not get those new features either. Running your test suite against every change protects you from this slide into stagnation.

You can counter the 'new features over quality' argument by surfacing evidence of the cost of bugs. Some of this evidence is relatively simple to measure, such as bug turnaround: what is the time cost of reporting, recording, implementing, and deploying a bug fix? This isn't limited to development time; support may also be involved, and potentially product, test, and DevOps. How many people are involved? Each time an issue arises, conduct a post-mortem to capture its impact and to identify the missing tests that would have caught the problem early.

Other evidence may be harder to gather but more convincing. Suppose an issue deters potential clients from signing up and paying for the service. This cost could be high, though difficult to measure, and preventing it is certainly worth the time it would have taken the engineers to implement the automated test.

Unexpected Costs

Refactoring untestable code

If the original code was not written with testing in mind, it will likely require refactoring to make testing possible. Implementation mistakes such as tight coupling, class bloat, and high complexity (amongst others) are usually caught when tests are written alongside the code. Refactoring later means the engineer has less recollection of the code (if they've even seen it before), which makes the task more cognitively challenging and creates the potential for new bugs to be introduced during the refactor.
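As a minimal sketch of one common refactor (all names are hypothetical), a function that reads the system clock directly is tightly coupled to it, so its result depends on when the test happens to run. Injecting the clock as a parameter, with the real clock as the default, lets a test supply a fixed time while production callers stay unchanged:

```python
from datetime import datetime, timezone
from typing import Callable


# Before: coupled to the real clock, so a test cannot control the outcome.
def greeting_untestable() -> str:
    hour = datetime.now(timezone.utc).hour
    return "Good morning" if hour < 12 else "Good afternoon"


# After: the clock is injected; production code still gets the real one by default.
def greeting(
    now: Callable[[], datetime] = lambda: datetime.now(timezone.utc),
) -> str:
    hour = now().hour
    return "Good morning" if hour < 12 else "Good afternoon"


def test_greeting_before_noon() -> None:
    fixed = datetime(2024, 1, 1, 9, 0, tzinfo=timezone.utc)
    assert greeting(lambda: fixed) == "Good morning"


def test_greeting_after_noon() -> None:
    fixed = datetime(2024, 1, 1, 15, 0, tzinfo=timezone.utc)
    assert greeting(lambda: fixed) == "Good afternoon"
```

The same idea, passing dependencies in rather than reaching out for them, applies to databases, HTTP clients, and file systems.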

Discovering bugs & undefined behaviour

When you take a serious look at automating your testing, don't be surprised to encounter areas of functionality that were never fully elaborated, or to discover genuine bugs that have not yet surfaced in the live environment.

You may also uncover incomplete or inconsistent product behaviours, such as scenarios that contradict the current behaviour and have no obvious solution. Such discoveries can lead to rework so significant that it could be categorised as new work.

System Performance

An all-too-common mistake is to leave performance testing until the latter stages of the project. There are multiple problems to be tackled here:

System performance testing can require high levels of automation due to the sheer volume and scope of the tests. These tests need to be repeatable, and running them manually is costly.

If any of your system requirements are performance-related and your system doesn't meet them, it may not be fit for purpose and could require significant rework, causing months of delay. It is far better to confirm that you have satisfied the performance requirements early in the project, when the cost of change is smaller.
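A performance requirement can be checked on every build rather than left to the end. The following is a minimal sketch, assuming a hypothetical `handle_request` function and an illustrative 95th-percentile budget of 50 ms; realistic load testing would typically use a dedicated tool and a production-like environment:

```python
import statistics
import time
from typing import Callable


def handle_request(n: int) -> int:
    """Hypothetical operation under test, standing in for real request handling."""
    return sum(i * i for i in range(n))


def p95_latency_ms(fn: Callable[..., object], *args: object, runs: int = 200) -> float:
    """Call fn `runs` times and return the 95th-percentile latency in milliseconds."""
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        fn(*args)
        samples.append((time.perf_counter() - start) * 1000)
    # quantiles(n=20) returns 19 cut points; the last one is the 95th percentile.
    return statistics.quantiles(samples, n=20)[18]


def test_handle_request_meets_latency_budget() -> None:
    budget_ms = 50.0  # illustrative requirement, not a recommendation
    assert p95_latency_ms(handle_request, 10_000) < budget_ms
```

Asserting on a percentile rather than a single timing makes the check less sensitive to one-off noise, although shared CI machines can still produce variance worth accounting for.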

Architectural choices can make it difficult to add tests later

When implementing a solution, it's not just the lines of code that need to be tested. Architectural choices have a huge influence on the success of a product, and getting them right can mean the difference between an elegant solution and an unwieldy beast. Establishing your testing layers early will indicate how appropriate your architectural decisions are; if these tests are hard to establish, it may be necessary to revisit your technology choices.

A simple architectural diagram is often sufficient to highlight potential complexities, one of the many reasons that diagramming is an essential tool for engineers.

See Testability Is An Architectural Choice for more detail on this point.

Starting late — What to test?

If you do find yourself in the position of having to retrospectively apply automated testing, what is the best way to approach this?

When backfilling tests, ask yourself what you are actually testing. It's not just about reaching a level of code coverage (an often misleading metric); it's about testing what the product should do. So how do you identify that?

Wrap the system in a test harness

One option is to put tests in place that capture the existing system behaviour and ensure that regressions do not creep in. This adds value and provides a base to build from.
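Tests of this kind are often called characterisation tests: they record what the system currently does, rather than what it should do, and fail if that output changes. A minimal sketch, assuming a hypothetical legacy function `format_invoice` whose output was captured once and stored as the baseline:

```python
import unittest


def format_invoice(customer: str, items: list[tuple[str, int, float]]) -> str:
    """Hypothetical legacy function whose current behaviour we want to pin down."""
    lines = [f"Invoice for {customer}"]
    total = 0.0
    for name, qty, unit_price in items:
        cost = qty * unit_price
        total += cost
        lines.append(f"{name} x{qty}: {cost:.2f}")
    lines.append(f"Total: {total:.2f}")
    return "\n".join(lines)


# Output captured from the existing system: a baseline, not a specification.
GOLDEN_OUTPUT = "Invoice for ACME\nWidget x3: 7.50\nGadget x1: 10.00\nTotal: 17.50"


class FormatInvoiceCharacterisationTest(unittest.TestCase):
    def test_output_matches_recorded_baseline(self):
        actual = format_invoice("ACME", [("Widget", 3, 2.5), ("Gadget", 1, 10.0)])
        self.assertEqual(actual, GOLDEN_OUTPUT)
```

When such a test fails after a change, either a regression has crept in or the behaviour changed intentionally and the baseline needs updating. Either way, someone has to make a conscious decision.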

Public API

Your clients use your public API, making it a great place to add value immediately. The goal here is to validate the expected behaviour of the API endpoints, not the underlying methods. As mentioned earlier, if your system was not developed with testing in mind, you will likely need to refactor those underlying methods. Because tests that target API behaviour do not depend on the internals, they survive the refactoring, reducing the amount of rework required.
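As a minimal sketch, assuming a hypothetical WSGI application with a `/health` endpoint (a real service would more likely use its web framework's test client), the tests below call the app the way an HTTP client would and assert only on the status line and JSON body. The internals can then be refactored freely without touching the tests:

```python
import json
from wsgiref.util import setup_testing_defaults


def app(environ, start_response):
    """Hypothetical WSGI application exposing a single public endpoint."""
    if environ["PATH_INFO"] == "/health":
        body = json.dumps({"status": "ok"}).encode("utf-8")
        start_response("200 OK", [("Content-Type", "application/json")])
    else:
        body = b'{"error": "not found"}'
        start_response("404 Not Found", [("Content-Type", "application/json")])
    return [body]


def call_endpoint(path: str) -> tuple[str, dict]:
    """Invoke the app as a client would, returning (status, parsed JSON body)."""
    environ: dict = {}
    setup_testing_defaults(environ)  # fills in a minimal, valid WSGI environ
    environ["PATH_INFO"] = path
    captured = {}

    def start_response(status, headers):
        captured["status"] = status

    body = b"".join(app(environ, start_response))
    return captured["status"], json.loads(body)


def test_health_endpoint_reports_ok() -> None:
    status, payload = call_endpoint("/health")
    assert status == "200 OK"
    assert payload == {"status": "ok"}


def test_unknown_path_returns_404() -> None:
    status, _ = call_endpoint("/missing")
    assert status.startswith("404")
```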

Test Only New Features

Begin by applying the new testing regime solely to new features, ensuring any new changes are accompanied by a complete set of tests in all the appropriate tiers.

Work from Existing Acceptance Criteria (AC)

Another option is to refer back to the acceptance criteria used to shape the system. The drawback of this approach is that these ACs are likely to be weak: because testing was not a high priority when they were written, the process will have lacked the rigour needed to identify edge cases and anomalies.

You should expect the existing ACs to throw up as many questions as answers.
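Where an AC is usable, it can often be translated almost line for line into an automated test, and the act of translating it tends to surface those questions. A minimal sketch, assuming a hypothetical AC ("Given a basket totalling more than £50, when the customer checks out, then delivery is free"), a hypothetical `delivery_charge` function, and an assumed standard charge that the AC never specifies:

```python
from decimal import Decimal

FREE_DELIVERY_THRESHOLD = Decimal("50.00")  # from the hypothetical AC
STANDARD_CHARGE = Decimal("4.99")  # assumed: the AC does not state it


def delivery_charge(basket_total: Decimal) -> Decimal:
    """Hypothetical implementation of the AC under test."""
    if basket_total > FREE_DELIVERY_THRESHOLD:
        return Decimal("0.00")
    return STANDARD_CHARGE


def test_given_basket_over_50_when_checkout_then_delivery_is_free() -> None:
    assert delivery_charge(Decimal("50.01")) == Decimal("0.00")


def test_given_basket_under_50_when_checkout_then_standard_charge_applies() -> None:
    assert delivery_charge(Decimal("49.99")) == STANDARD_CHARGE


def test_boundary_at_exactly_50() -> None:
    # "More than £50" implies exactly £50 still pays for delivery.
    # This is the kind of edge case to confirm with the product owner.
    assert delivery_charge(Decimal("50.00")) == STANDARD_CHARGE
```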

Automation of Manual Test scripts

If you are transitioning from manual testing, you may have test 'scripts' containing much of the detail you need. Collaboration between development and QA is crucial here; focus on the most frequently run, highest-value test cases first.
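A manual script is often a table of steps, inputs, and expected results, which maps naturally onto a data-driven test. A minimal sketch, assuming a hypothetical username rule and hypothetical script rows; `unittest`'s `subTest` reports each failing row separately, much as a tester would mark each step:

```python
import re
import unittest


def is_valid_username(name: str) -> bool:
    """Hypothetical rule: 3 to 16 letters, digits, or underscores."""
    return re.fullmatch(r"[A-Za-z0-9_]{3,16}", name) is not None


# Rows transcribed from a hypothetical manual test script:
# (step id, value entered, expected outcome)
MANUAL_SCRIPT_ROWS = [
    ("TC-01", "alice", True),
    ("TC-02", "al", False),  # too short
    ("TC-03", "a" * 17, False),  # too long
    ("TC-04", "bob smith", False),  # contains a space
    ("TC-05", "carol_99", True),
]


class UsernameScriptTest(unittest.TestCase):
    def test_manual_script_rows(self):
        for step_id, value, expected in MANUAL_SCRIPT_ROWS:
            # Each row is reported on its own if it fails.
            with self.subTest(step=step_id, value=value):
                self.assertEqual(is_valid_username(value), expected)
```

Keeping the rows as data means QA can extend the table without needing to change the test logic.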

Is it ok to sometimes skip automated testing?

Surely we can save some time by not writing all these tests? Sometimes a looming deadline prompts stakeholders to suggest that the date should take precedence over quality, and the team may be encouraged to skimp on testing. This is a bad idea. If the deadline cannot be moved, reducing scope is the better option. However, there are occasions when it may be valid to reduce or omit automated testing; here are a few examples.

Investigation work / Spike

Investigative work is a good candidate for skipping automated tests. A spike is a small task to learn about a technology or problem space, and there is little, if any, value in adding automated tests because everything other than the documented findings is likely to be discarded afterwards.

Demos

Demos showcase your product and aim to win over new and existing customers. The demo software will be based on your existing product and should therefore have an automated test suite behind it. This is not the time to omit testing.

POCs

It really depends on the scope of your proof of concept (POC). Here we assume the POC is bigger than a spike and involves integrating with a third party to enable new functionality. Such a POC may be complex enough to warrant an element of automated testing.

The POC is likely to culminate in a demonstration. For either a demo or a POC, failure can be embarrassing, and in front of the wrong audience it can kill a commercial opportunity. For POCs, limit the scope, test the complex parts, and plan your demo.

Conclusions

Automated testing is a game changer for your systems: it enables you to execute thousands of tests thousands of times a day, at a scale that could never be achieved manually. Fast feedback and frequent testing increase confidence in the code base, which means deployment frequency can increase to the point where deployments happen many times a day.

We've seen that applying automation retrospectively is hard work and presents many new challenges. These range from technical issues, such as refactoring existing code and potential design changes, to non-technical ones, such as resetting stakeholders' expectations about the perceived slowdown in feature delivery and the need to address areas of unexpected behaviour.

Embrace automated testing from day one: it sets the precedent, reduces risk, and improves quality. Don't put it off; automate from the outset.

Title Image: Crm software vectors created by vectorjuice