Introduction

This article looks at the tools and libraries available to assist in component testing an application that uses Kafka as the message broker and Confluent Schema Registry for managing the schemas for Avro serialized messages. The application under test, and the external resources, are brought up in Docker containers. This is managed by Testcontainers, and orchestrated by the Component Test Framework. The component tests then send events to the Kafka broker, and consume the resulting outbound messages.

The component tests are demonstrated in the companion Spring Boot application that is detailed in the article Kafka Schema Registry & Avro: Spring Boot Demo.

The source code for the Spring Boot demo is available here:

https://github.com/lydtechconsulting/kafka-schema-registry-avro/tree/v1.0.0

Component Tests

Overview

Component tests are coarse-grained tests. They treat the system under test as a black box, typically calling its REST APIs or sending in messages via the broker to trigger processing, and then asserting on the outcomes. These tests layer on top of the integration tests that were covered in the article Kafka Schema Registry & Avro: Integration Testing. While integration tests mock external resources such as the message broker or database, or swap in in-memory versions of them, component tests spin up real versions of these, or simulators representing third-party services, for the application to use.

Testcontainers

The component tests use the Testcontainers library to create, start, and manage the resources, such as the application under test and the Kafka broker, in their own Docker containers. At the end of the test run Testcontainers cleans up the Docker containers.

Component Test Framework

The component-test-framework is an open source library by Lydtech that orchestrates the spinning up of the required Docker containers using Testcontainers. It provides utility classes for interacting with the different resources, for example to produce messages to and consume messages from the Kafka broker. Applying a JUnit 5 extension annotation to each component test triggers this setup once, before the suite of tests executes.

Demo Application Component Test

Test Flow

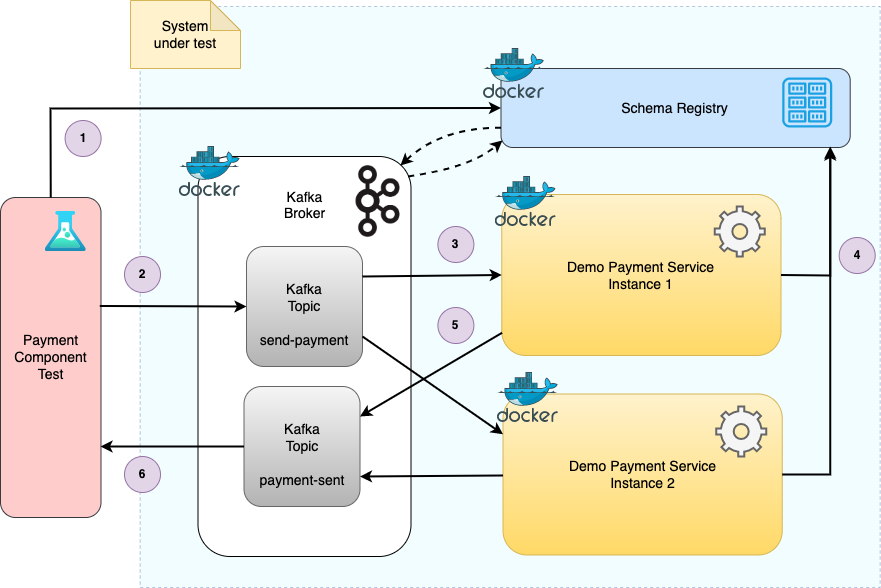

The following diagram shows the flow for the PaymentEndToEndCT test that sends in a number of send-paymentcommand events to trigger payments, asserting on the resulting outbound payment-sent events.

The component test setup hooks into the component-test-framework, which brings up the Dockerised Kafka broker, Confluent Schema Registry, and service instances. At this point the test begins.

The component test registers the schemas of the send-payment and payment-sent events with the Schema Registry.

The test sends multiple send-payment command events to the inbound send-payment topic. These events are serialized using Avro serialization.

The application instances under test consume the events, and acquire the schema Id serialized at the beginning of the message.

Each consumer instance looks up the schema for that Id from the Schema Registry (and then caches it), and deserializes the events, validating them against the schema.

Once each event is processed a corresponding outbound payment-sent event is created. The application producer instance looks up the schema Id for the message from the Schema Registry and serializes this at the beginning of the message, and then writes this message to the payment-sent topic.

The component test consumes the outbound events, deserializes them using Avro, and asserts they are as expected.
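The schema Id "serialized at the beginning of the message" in the steps above can be illustrated with a small sketch. It assumes Confluent's documented wire format (a single magic byte of 0, followed by a 4-byte big-endian schema Id, followed by the Avro payload), which this article does not spell out. The class name, the schema Id 42 and the payload bytes are made up for illustration, and the real work is done by Confluent's Avro serializer and deserializer rather than by code like this.

```java
import java.nio.ByteBuffer;

// Illustrative only: mimics the framing that Confluent's Avro serializer applies.
final class WireFormatHeader {
    // Assumed wire format: magic byte (0), 4-byte big-endian schema Id, Avro payload.
    static final byte MAGIC_BYTE = 0x0;

    /** Prefixes an already Avro-encoded payload with the magic byte and schema Id. */
    public static byte[] frame(int schemaId, byte[] avroPayload) {
        return ByteBuffer.allocate(1 + 4 + avroPayload.length)
                .put(MAGIC_BYTE)
                .putInt(schemaId)   // ByteBuffer is big-endian by default
                .put(avroPayload)
                .array();
    }

    /** Reads the schema Id from the start of a framed message. */
    public static int schemaId(byte[] message) {
        ByteBuffer buffer = ByteBuffer.wrap(message);
        if (buffer.get() != MAGIC_BYTE) {
            throw new IllegalArgumentException("Unknown magic byte");
        }
        return buffer.getInt();
    }

    public static void main(String[] args) {
        byte[] framed = frame(42, new byte[] {1, 2, 3});
        System.out.println("schema Id = " + schemaId(framed));
    }
}
```

A consumer that reads the Id this way can then fetch the matching schema from the Schema Registry once and reuse it for later messages carrying the same Id.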

Test Execution

Build the project, creating the Spring Boot application jar, from the root directory:

mvn clean install

Build the application Docker container:

cd schema-registry-demo-service

docker build -t ct/schema-registry-demo-service:latest .

Run tests (from parent directory or component-test directory):

mvn test -Pcomponent

Orchestrating Testcontainers

The component test uses the component-test-framework to orchestrate Testcontainers starting the Dockerised containers. Include the dependency in the component-test module pom:

<dependency>
    <groupId>dev.lydtech</groupId>
    <artifactId>component-test-framework</artifactId>
    <version>1.8.0</version>
</dependency>

The resources configured in the maven-surefire-plugin definition of the component-test module pom determine which containers start. This configuration should be part of a component profile. The following profile definition shows the configuration for this project (with some default configuration removed for brevity):

<profiles>
    <profile>
        <id>component</id>
        <build>
            <plugins>
                <plugin>
                    <groupId>org.apache.maven.plugins</groupId>
                    <artifactId>maven-surefire-plugin</artifactId>
                    <version>2.22.2</version>
                    <configuration>
                        <environmentVariables>
                            <TESTCONTAINERS_RYUK_DISABLED>${containers.stayup}</TESTCONTAINERS_RYUK_DISABLED>
                        </environmentVariables>
                        <systemPropertyVariables>
                            <service.name>schema-registry-demo-service</service.name>
                            <service.instance.count>2</service.instance.count>
                            <kafka.enabled>true</kafka.enabled>
                            <kafka.schema.registry.enabled>true</kafka.schema.registry.enabled>
                        </systemPropertyVariables>
                    </configuration>
                </plugin>
            </plugins>
        </build>
    </profile>
</profiles>

The key settings enable the Dockerised Kafka broker and Confluent Schema Registry, and start two instances of the application under test.
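To make the role of these surefire system properties concrete, here is a minimal sketch of how test-side code could read them. It is not the component-test-framework's implementation: the class name and the fallback values are hypothetical, and only the property names are taken from the profile above.

```java
// Hypothetical holder for the settings passed in via surefire systemPropertyVariables.
final class ComponentTestSettings {
    final String serviceName;
    final int serviceInstanceCount;
    final boolean kafkaEnabled;
    final boolean schemaRegistryEnabled;

    private ComponentTestSettings(String serviceName, int serviceInstanceCount,
                                  boolean kafkaEnabled, boolean schemaRegistryEnabled) {
        this.serviceName = serviceName;
        this.serviceInstanceCount = serviceInstanceCount;
        this.kafkaEnabled = kafkaEnabled;
        this.schemaRegistryEnabled = schemaRegistryEnabled;
    }

    /** Reads the settings from JVM system properties. */
    public static ComponentTestSettings fromSystemProperties() {
        // The fallback values are illustrative assumptions, not the framework's defaults.
        return new ComponentTestSettings(
                System.getProperty("service.name", "app"),
                Integer.parseInt(System.getProperty("service.instance.count", "1")),
                Boolean.parseBoolean(System.getProperty("kafka.enabled", "false")),
                Boolean.parseBoolean(System.getProperty("kafka.schema.registry.enabled", "false")));
    }
}
```

Because surefire passes systemPropertyVariables to the forked test JVM, code running in the tests sees exactly the values declared in the profile.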

Annotate the component test class with @ExtendWith(TestContainersSetupExtension.class). This is all that is required to instigate the component-test-framework orchestration of Testcontainers.

@ExtendWith(TestContainersSetupExtension.class)
public class PaymentEndToEndCT {

Schema Registration

The first thing the component test does is register the schemas for the Avro messages, SendPayment and PaymentSent. This will enable the Avro serialization and deserialization flows that are detailed in the Kafka Schema Registry & Avro: Introduction overview article. The source for these Avro classes is generated by Confluent’s kafka-schema-registry-maven-plugin in the avro-schemas module.

The component-test-framework provides a utility client class for interacting with the Schema Registry, KafkaSchemaRegistryClient. This includes a register schema method that the component test calls in its setup:

@BeforeAll
public static void beforeAll() throws Exception {
    // Reset the Schema Registry, then register the message schemas.
    KafkaSchemaRegistryClient.getInstance().resetSchemaRegistry();
    KafkaSchemaRegistryClient.getInstance().registerSchema(SEND_PAYMENT_TOPIC, SendPayment.getClassSchema().toString());
    KafkaSchemaRegistryClient.getInstance().registerSchema(PAYMENT_SENT_TOPIC, PaymentSent.getClassSchema().toString());
}

The first argument provided is the topic name. It represents the schema subject, with a -value suffix appended by the client to indicate that the schema applies to the message value, and not the key. The Avro generated source code provides a getClassSchema() method that returns the schema for the message. Calling toString() on it yields the JSON representation of the schema, and it is this that is registered with the Schema Registry.

The KafkaSchemaRegistryClient then uses Confluent’s SchemaRegistryClient, which calls the Schema Registry REST API to perform the registration.
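For readers who want to see what such a registration looks like on the wire, the sketch below derives the subject from the topic name and builds (without sending) the corresponding REST request. It assumes the Schema Registry's documented endpoint POST /subjects/{subject}/versions with the application/vnd.schemaregistry.v1+json content type. The class and method names are hypothetical, and any base URL passed in (such as http://localhost:8081) is an assumption rather than something taken from the demo project. It requires Java 11 or later.

```java
import java.net.URI;
import java.net.http.HttpRequest;

// Illustrative only: the component test relies on KafkaSchemaRegistryClient instead.
final class RegisterSchemaRequest {

    /** Subject for a topic's message value: the topic name plus a -value suffix. */
    public static String valueSubject(String topic) {
        return topic + "-value";
    }

    /** Wraps the schema JSON as an escaped string in the request body. */
    public static String body(String schemaJson) {
        return "{\"schema\":" + quote(schemaJson) + "}";
    }

    /** Builds the registration request; sending it is left to the caller. */
    public static HttpRequest build(String registryBaseUrl, String topic, String schemaJson) {
        return HttpRequest.newBuilder()
                .uri(URI.create(registryBaseUrl + "/subjects/" + valueSubject(topic) + "/versions"))
                .header("Content-Type", "application/vnd.schemaregistry.v1+json")
                .POST(HttpRequest.BodyPublishers.ofString(body(schemaJson)))
                .build();
    }

    // Minimal JSON string escaping: quotes, backslashes and control characters.
    private static String quote(String raw) {
        StringBuilder sb = new StringBuilder("\"");
        for (char c : raw.toCharArray()) {
            if (c == '"' || c == '\\') {
                sb.append('\\').append(c);
            } else if (c < 0x20) {
                sb.append(String.format("\\u%04x", (int) c));
            } else {
                sb.append(c);
            }
        }
        return sb.append('"').toString();
    }
}
```

The schema is sent as a JSON string nested inside a JSON document, which is why the output of getClassSchema().toString() has to be escaped rather than embedded directly.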

At this point the test is ready to run, and it sends in a number of send-payment command events, instructing the application to make a payment before emitting an outbound payment-sent event for each.

@Test
public void testFlow() throws Exception {
    int totalMessages = 1000;
    int delayMs = 0;

    // Send the inbound send-payment command events.
    for (int i = 1; i <= totalMessages; i++) {
        String key = UUID.randomUUID().toString();
        String payload = UUID.randomUUID().toString();
        KafkaAvroClient.getInstance().sendMessage(SEND_PAYMENT_TOPIC, key, buildSendPayment(payload));
        if (i % 100 == 0) {
            log.info("Total events sent: {}", i);
        }
        TimeUnit.MILLISECONDS.sleep(delayMs);
    }

    // 'consumer' is the test consumer for the payment-sent topic, created elsewhere in the test class (not shown).
    List<ConsumerRecord<String, PaymentSent>> outboundEvents = KafkaAvroClient.getInstance().consumeAndAssert("testFlow", consumer, totalMessages, 3);

    // Assert on each outbound payment-sent event.
    outboundEvents.forEach(outboundEvent -> {
        assertThat(outboundEvent.value().getPaymentId(), notNullValue());
        assertThat(outboundEvent.value().getToAccount().toString(), equalTo("toAcc"));
        assertThat(outboundEvent.value().getFromAccount().toString(), equalTo("fromAcc"));
    });
}

The test uses the component-test-framework KafkaAvroClient utility class to send the test events to the broker and consume and assert on the resulting events. As these are Avro messages this Kafka client also uses Avro for serializing and deserializing the events.
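The consume-and-assert step can be pictured as a polling loop that collects events until the expected number has arrived. The sketch below is a generic illustration of that idea and not the KafkaAvroClient implementation. The poll source is abstracted as a Supplier so that the example needs no broker, the maxPolls limit is invented for the sketch, and treating the final argument of consumeAndAssert (3 in the test above) as a number of further polls that must not turn up unexpected extra events is an assumption.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Supplier;

// Hypothetical helper: polls a source until the expected number of events has arrived.
final class PollUntilCount {

    public static <T> List<T> consumeAndAssert(Supplier<List<T>> poll, int expectedCount,
                                               int furtherPolls, int maxPolls) {
        List<T> received = new ArrayList<>();
        int polls = 0;
        // Keep polling until enough events arrive or the poll budget is used up.
        while (received.size() < expectedCount && polls < maxPolls) {
            received.addAll(poll.get());
            polls++;
        }
        // Poll a few more times so that unexpected extra events are detected.
        for (int i = 0; i < furtherPolls; i++) {
            received.addAll(poll.get());
        }
        if (received.size() != expectedCount) {
            throw new AssertionError("Expected " + expectedCount + " events but received " + received.size());
        }
        return received;
    }
}
```

With a real Kafka consumer, the supplier would wrap a call to poll and map the returned records into a list.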

Remote Debugging

The containers.stayup property is defined in the properties section at the top of the pom. It supplies the value of the TESTCONTAINERS_RYUK_DISABLED environment variable in the maven-surefire-plugin configuration that executes the component tests, and defaults to false:

<containers.stayup>false</containers.stayup>

The Docker containers can then be left up between test runs by running with this flag set to true (Maven treats a -D property given without a value as true):

mvn test -Pcomponent -Dcontainers.stayup

This results in Testcontainers not cleaning up the Docker containers at the end of the test run. The developer can now re-run tests without the time-consuming startup of the Docker containers, so changes to the tests can be made and re-run for immediate feedback as they are developed.

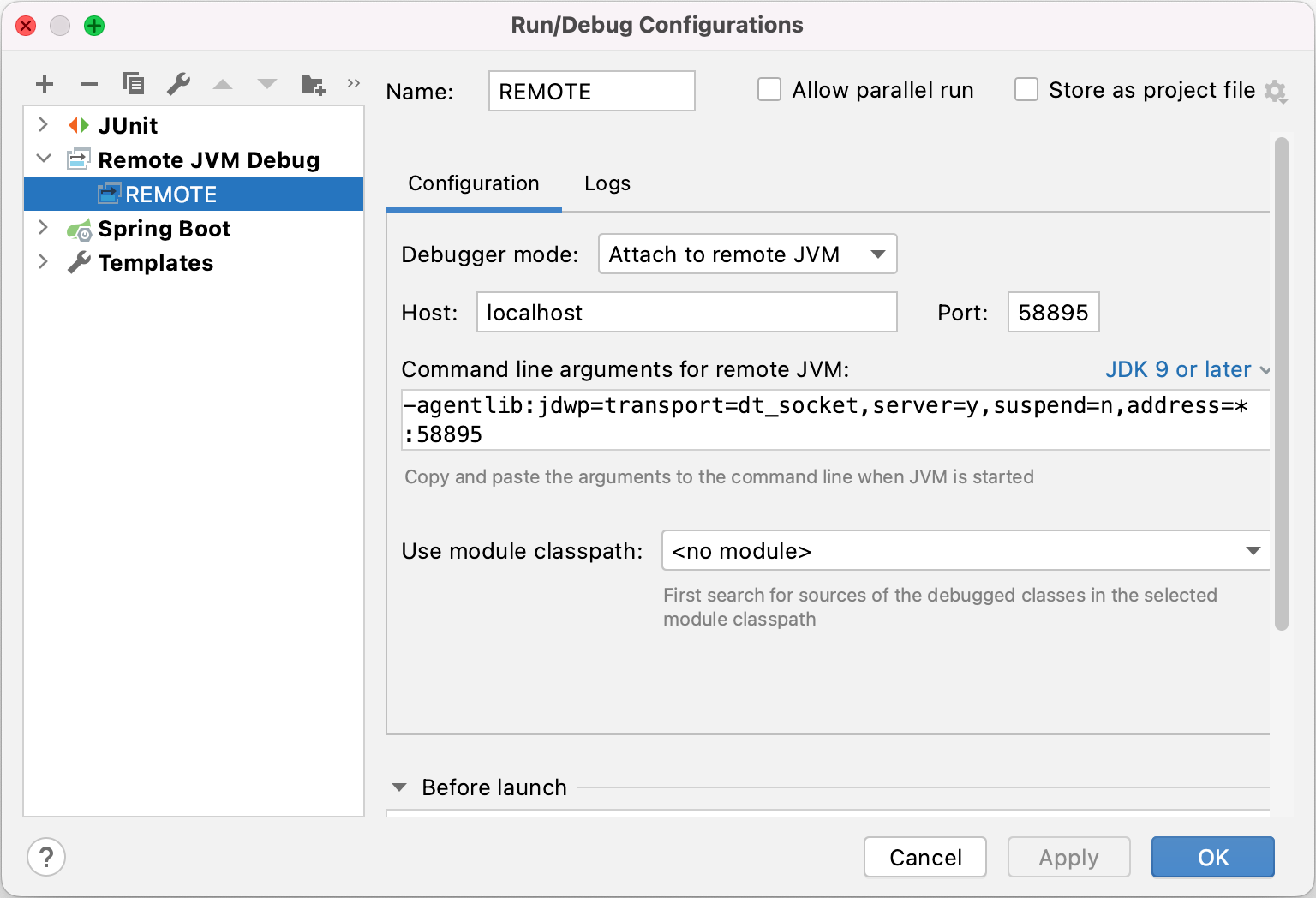

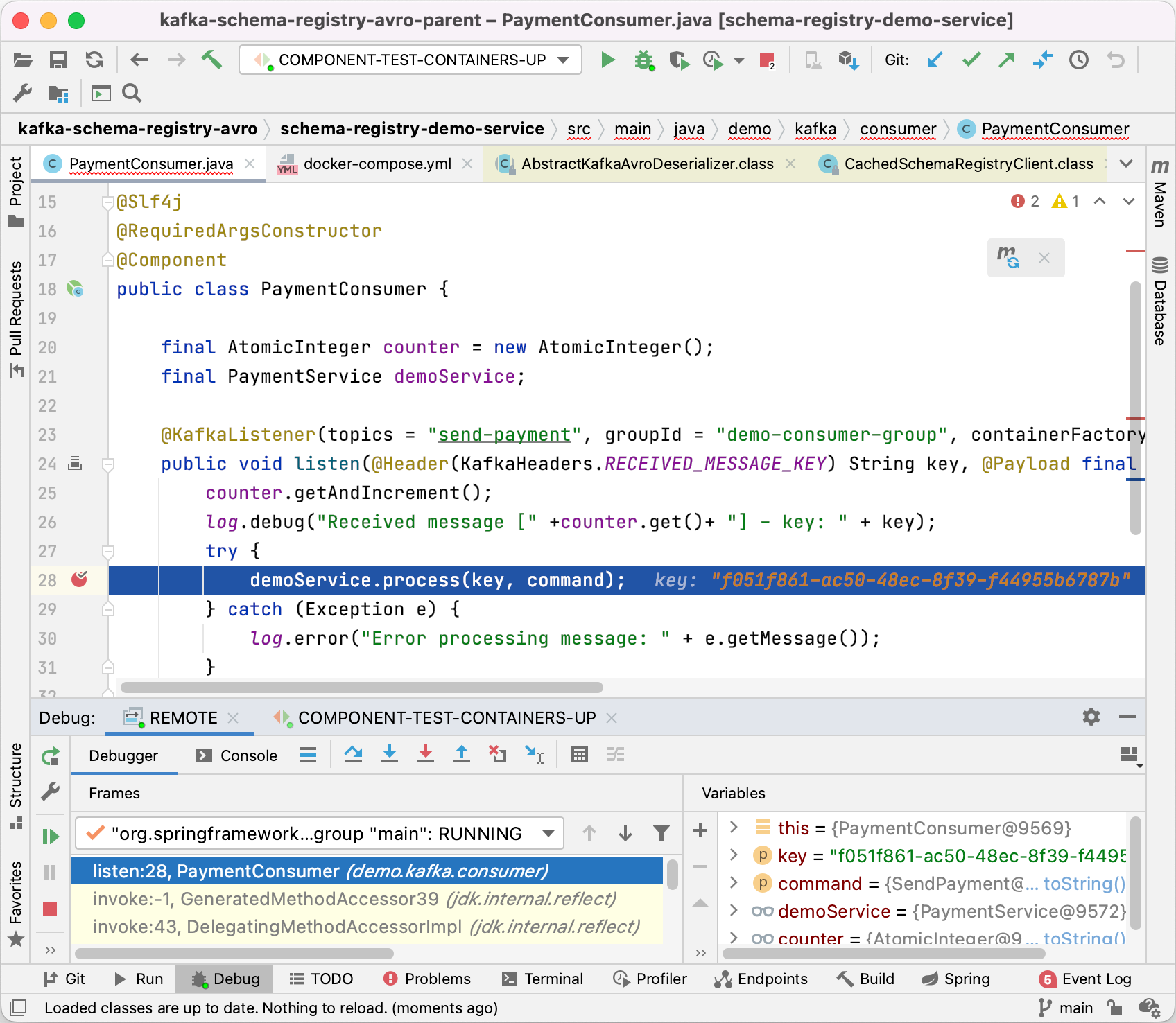

With the containers running, the host ports mapped to the 5001 remote debug port of the application instances under test can be discovered by running:

docker ps

The output includes the application instance containers, for example:

ct/schema-registry-demo-service:latest 0.0.0.0:58895->5001/tcp, 0.0.0.0:58896->9001/tcp ct-schema-registry-demo-service-2

ct/schema-registry-demo-service:latest 0.0.0.0:58772->5001/tcp, 0.0.0.0:58773->9001/tcp ct-schema-registry-demo-service-1

In this case the ports to use are 58895 and 58772.
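Picking the ports out by eye is fine for two instances. As a small illustration of what is being read from the output, the sketch below extracts the host ports mapped to a given container port from text in the format shown above. The class name is hypothetical, and the parsing assumes the host-port->container-port/tcp notation that docker ps prints.

```java
import java.util.ArrayList;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Set;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical helper: finds the host ports mapped to a container port in docker ps output.
final class DebugPortFinder {

    public static List<Integer> hostPorts(String dockerPsOutput, int containerPort) {
        // Matches mappings such as "0.0.0.0:58895->5001/tcp" and captures the host port.
        Pattern mapping = Pattern.compile(":(\\d+)->" + containerPort + "/tcp");
        Matcher matcher = mapping.matcher(dockerPsOutput);
        // A set avoids duplicates when a port is listed for both IPv4 and IPv6.
        Set<Integer> ports = new LinkedHashSet<>();
        while (matcher.find()) {
            ports.add(Integer.parseInt(matcher.group(1)));
        }
        return new ArrayList<>(ports);
    }
}
```

Passing the two example lines above with a container port of 5001 yields 58895 and 58772, in that order.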

Attach the IDE's remote debugger to these ports, then run the test within the IDE. Execution will stop at the breakpoints that have been set in the application code.

Note that with two application instances running it may be prudent to set up the remote debug for each as both will be consuming events.

Conclusion

Component tests validate that the end to end flows behave as required, and that the calls the application under test makes to external services work as expected. By utilising Testcontainers and the component-test-framework it is straightforward for the component tests to start Dockerised instances of the application under test, the Kafka broker and Confluent Schema Registry that they depend on, facilitating this black box testing.

Source Code

The source code for the accompanying Spring Boot demo application is available here:

https://github.com/lydtechconsulting/kafka-schema-registry-avro/tree/v1.0.0

More On Kafka Schema Registry & Avro

The following accompanying articles cover the Schema Registry and Avro:

Kafka Schema Registry & Avro: Introduction: provides an introduction to the schema registry and Avro, and details the serialization and deserialization flows.

Kafka Schema Registry & Avro: Spring Boot Demo (1 of 2): provides an overview of the companion Spring Boot application and steps to run the demo.

Kafka Schema Registry & Avro: Spring Boot Demo (2 of 2): details the Spring Boot application project structure, implementation and configuration which enables it to utilise the Schema Registry and Avro.

Kafka Schema Registry & Avro: Integration Testing: looks at integration testing the application using Spring Boot test, with the embedded Kafka broker and a wiremocked Schema Registry.
