Introduction

This fun little exercise walks us through deploying the Kafka Streams Windowing applications into a simple Kubernetes cluster. Once deployed in the cluster we can see these apps operating with data feeds, providing a great opportunity to observe and experiment.

Each window app that is deployed is covered in more detail in the article Kafka Streams - Windowing Overview and its child articles.

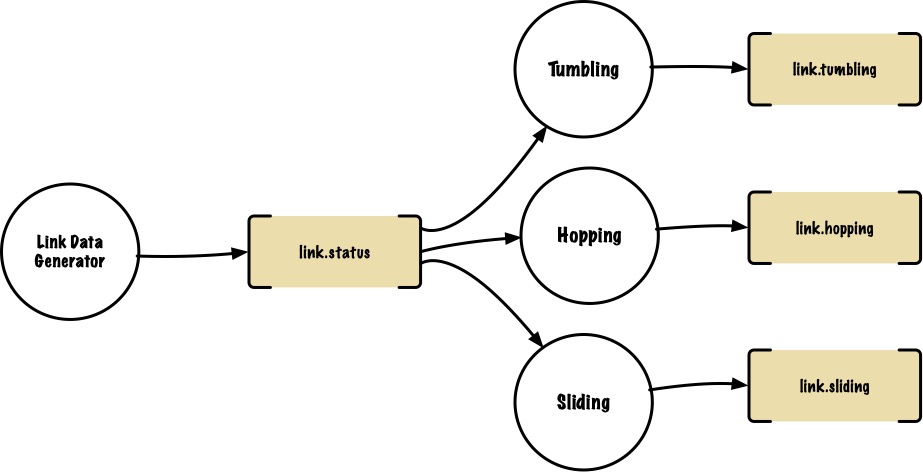

The system combines the windowing applications from the previous articles and deploys them into a single cluster. The flow of events looks like this:

The events are created in the Link Data event generator and published to the link.status topic. Each windowing app consumes from the link.status topic, performs the aggregation defined for its window type, and then publishes a final aggregation event to a topic specific to that app, e.g. link.hopping is the output topic for the hopping window app. The use of separate topics isolates the events from the different apps so that we can more clearly see what each app is outputting.

All code for this example can be found on GitHub: Kafka Streams Windowing

The K8s Deployment

We're aiming for the simplest deployment we can to demonstrate the various windowing types working with data in a 'live' environment.

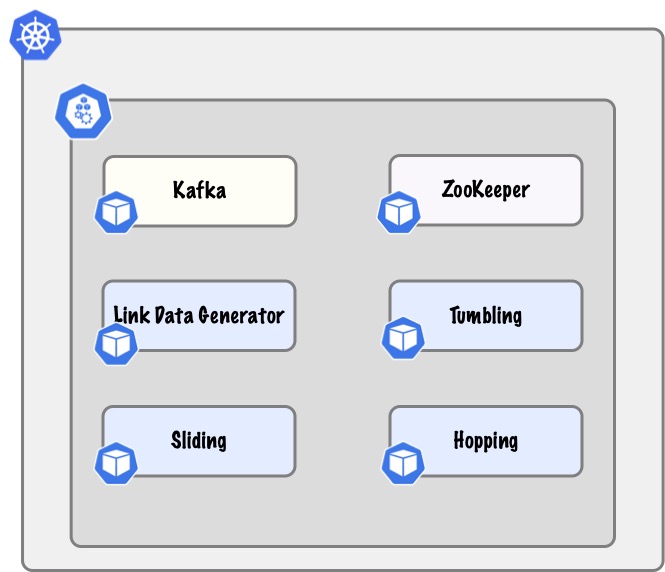

namespace - For isolation we will do all of this in a separate namespace, kafka-streams-windowing

nodes - We will run a cluster with a single node that will host the pods

pods - Each app will be deployed in its own pod (as a service). This includes pods for kafka and zookeeper

We will also use Minikube so that we can run all of this locally. I've written this on a Mac, but with the exception of the helper bash scripts later on, it should work wherever you are.

Simple! So let's start.

Run in K8s - Setup

Clone the project

All code for this example can be found on GitHub: Kafka Streams Windowing

Clone the repo and have a nosey around (it will make more sense if you've previously read the overview article).

k8s - Minikube

For K8s we will use Minikube. A download/install guide for Minikube can be found here: MiniKube Download & Install Guide

Start Minikube

minikube start

Start Dashboard

In a different terminal start the dashboard

minikube dashboard

Configure your shell to use Minikube's internal Docker daemon as the Docker host. If your deployments are failing to find the correct Docker images, there's a good chance you forgot this step... There's questionable wisdom in putting a reminder about forgetting this step in a comment above the step you may have forgotten... :)

minikube docker-env

eval $(minikube -p minikube docker-env)
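Because this step is so easy to forget, a quick check before building can save some head scratching. The snippet below is only a sketch: it assumes that `minikube docker-env` exports `MINIKUBE_ACTIVE_DOCKERD` alongside `DOCKER_HOST`, and the function names are made up for illustration.

```shell
# Succeeds (exit 0) when this shell appears to be pointed at Minikube's Docker daemon.
# Assumption: `minikube docker-env` exports MINIKUBE_ACTIVE_DOCKERD and DOCKER_HOST.
using_minikube_docker() {
  [ -n "${MINIKUBE_ACTIVE_DOCKERD:-}" ] && [ -n "${DOCKER_HOST:-}" ]
}

# Print a one-line summary of where docker commands in this shell will go.
docker_env_status() {
  if using_minikube_docker; then
    echo "docker -> minikube (${DOCKER_HOST})"
  else
    echo "docker -> local daemon; run: eval \$(minikube -p minikube docker-env)"
  fi
}
```

Running `docker_env_status` in the terminal you intend to build from should tell you whether the eval step has been applied there. Remember that the environment variables only apply to the terminal in which you ran the eval.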

Build the Apps & Images

Build the apps. Go to your checked out project and build with mvn.

mvn clean install

Then build the Docker images so that they are available to Minikube's Docker daemon instead of your local one.

The script dockerBuild.sh will build all the apps for you. If you wish to build the apps individually (or you're on a non-Unix system), just copy the relevant command from within this script.

sh dockerBuild.sh

Apply the k8s configs

The following commands create the environment for us and deploy the stream apps. Apply them in the order shown.

kubectl apply -f k8s/00-namespace.yaml

kubectl apply -f k8s/01-zookeeper.yaml

kubectl apply -f k8s/02-kafka.yaml

kubectl apply -f k8s/04-link-data-generator-app.yaml

kubectl apply -f k8s/05-tumbling-window-app.yaml

kubectl apply -f k8s/06-hopping-window-app.yaml

kubectl apply -f k8s/07-sliding-window-app.yaml
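If you'd rather not paste each line, the numbered file names lend themselves to a small loop. This is a rough sketch, not something from the repo: the `apply_manifests` function is hypothetical, and it assumes every .yaml file in the k8s directory is meant to be applied and that the numeric prefixes give the right order.

```shell
# Apply every manifest in a directory in filename order (00-, 01-, 02-, ...).
# Stops at the first failure so later apps are not deployed after a broken step.
# Assumption: all *.yaml files in the directory are meant to be applied.
apply_manifests() {
  dir="${1:-k8s}"
  for manifest in "$dir"/*.yaml; do
    # An unmatched glob stays literal, so guard against an empty directory.
    [ -e "$manifest" ] || { echo "no manifests found in $dir" >&2; return 1; }
    echo "applying $manifest"
    kubectl apply -f "$manifest" || return 1
  done
}
```

From the project root, `apply_manifests k8s` should then do the same job as the individual commands above.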

The deployment will be in the kafka-streams-windowing namespace. Once the services have sorted themselves out and established connections, you should be able to see them all running via the Minikube dashboard.

When using the UI remember to change the namespace (dropdown highlighted) or you'll see nothing relevant to the commands you've issued.
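If you prefer the terminal to the dashboard, the same check can be done with kubectl. The sketch below lists the pods and then blocks until they all report Ready; the function name and the 180 second default timeout are arbitrary choices of mine rather than anything the project defines.

```shell
NAMESPACE="kafka-streams-windowing"

# List the pods in the namespace, then wait until all of them report Ready
# or the timeout passes. The default timeout of 180s is an arbitrary choice.
wait_for_pods() {
  kubectl -n "$NAMESPACE" get pods &&
  kubectl -n "$NAMESPACE" wait --for=condition=Ready pods --all --timeout="${1:-180s}"
}
```

Calling `wait_for_pods 60s` would give up after a minute, which is handy if an image failed to build and a pod is never going to start.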

Observable outputs

What can we see?

The link-data-generator logs contain the records being emitted

Each window-app has logs containing:

- a peek of the ingested record

- a peek of the final event (window aggregation)

To view the logs, click on the ellipsis (...) at the end of the pod entry in the list and choose the logs option.
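The logs are also reachable from the command line. As a minimal sketch (the `tail_all_pods` function is hypothetical), this prints the last few lines from every pod in the namespace so you can glance over the generator and each window app in one go.

```shell
NAMESPACE="kafka-streams-windowing"

# Print the last N log lines (default 20) of every pod in the namespace.
tail_all_pods() {
  lines="${1:-20}"
  # `-o name` prints one entry per pod in the form pod/<name>.
  for pod in $(kubectl -n "$NAMESPACE" get pods -o name); do
    echo "== $pod =="
    kubectl -n "$NAMESPACE" logs --tail="$lines" "$pod"
  done
}
```

To follow a single app continuously instead, `kubectl -n kafka-streams-windowing logs -f <pod name>` streams its output until you press Ctrl-C.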

Connect to the topic and consume the events

Log onto the kafka pod where we can interact with kafka and consume topics.

The following command allows you to jump onto the container with a bash session. You need to find the pod name to do this.

kubectl -n kafka-streams-windowing exec -it <kafka-service pod name> -- /bin/bash

Alternatively, this command does the same as above and discovers the kafka service pod name for you:

kubectl -n kafka-streams-windowing exec -it $(kubectl get pods -n kafka-streams-windowing -o custom-columns=":metadata.name" | grep kafka-service) -- /bin/bash

Note that the nested kubectl command gets the name of the kafka pod to exec into.
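One thing to watch with the grep approach is that it returns every matching pod, and returns nothing at all if the pod has not started. A slightly more defensive variant might look like the sketch below. The function names are hypothetical, and it uses `-o name` rather than custom columns, which yields names in the form pod/<name> that kubectl exec also accepts.

```shell
NAMESPACE="kafka-streams-windowing"

# Print the first pod whose name contains the given fragment; fail if none match.
find_pod() {
  pod=$(kubectl -n "$NAMESPACE" get pods -o name | grep "$1" | head -n 1)
  [ -n "$pod" ] && echo "$pod"
}

# Open a bash session in the kafka pod ("kafka-service" is the fragment used above).
kafka_shell() {
  pod=$(find_pod kafka-service) || { echo "kafka pod not found" >&2; return 1; }
  kubectl -n "$NAMESPACE" exec -it "$pod" -- /bin/bash
}
```

With these defined, `kafka_shell` either drops you into the container or tells you plainly that the pod could not be found.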

List the topics available

/bin/kafka-topics --bootstrap-server localhost:9092 --list

Consume a topic

Consume the outputs of an app via its output topic:

kafka-console-consumer --bootstrap-server localhost:9092 --topic <topic name>

e.g. kafka-console-consumer --bootstrap-server localhost:9092 --topic link.tumbling
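By default the console consumer only shows records that arrive after it starts, and prints values without keys. As a rough sketch to run inside the kafka pod, the hypothetical helper below reads a topic from the beginning, prints each key next to its value, and stops after a fixed number of messages. The cap of 10 is my own choice, and if the apps write windowed keys in a binary format the printed key may not be human-readable.

```shell
# Read a topic from the start, printing each record's key next to its value.
# Intended to be run inside the kafka pod. Stops after N messages (default 10).
consume_topic() {
  kafka-console-consumer --bootstrap-server localhost:9092 \
    --topic "$1" \
    --from-beginning \
    --property print.key=true \
    --max-messages "${2:-10}"
}
```

For example, `consume_topic link.tumbling 5` would show the first five tumbling window results and then exit.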

Cleanup

Once you're finished you can either remove the deployment via the UI, or simply delete the namespace from the command line:

kubectl delete namespace kafka-streams-windowing

The command will take a few moments as the contents of the namespace are being destroyed.
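If you also want to put your machine back the way it was, the cleanup can go a step further. This is only a sketch under my own assumptions (the `teardown` function is hypothetical): it deletes the namespace, points the current shell's docker commands back at the local daemon, and stops Minikube.

```shell
# Tear everything down: delete the namespace, point docker back at the local
# daemon for this shell, and stop the Minikube cluster.
teardown() {
  kubectl delete namespace kafka-streams-windowing
  # Reverses the earlier `eval $(minikube docker-env)` for the current shell only.
  eval "$(minikube docker-env --unset)"
  minikube stop
}
```

Stopping Minikube keeps the cluster on disk so that a later `minikube start` picks up where you left off.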
